A few months ago, I had the chance to talk to some of our partners about what we're bringing to market with Azure AI. It was a fast-paced hour, and the session was almost over when someone raised their hand and acknowledged that there was no questioning the business value and opportunities ahead, but what they really wanted to hear more about was Responsible AI and AI safety.

This stays with me because it shows how top of mind this is as we move further into the era of AI with the launch of powerful tools that advance humankind's critical thinking and creative expression. That partner's question reminded me of the importance of making AI systems responsible by design. This means the development and deployment of AI must be guided by a responsible framework from the very beginning. It can't be an afterthought.

Our commitment to responsible AI innovation goes beyond principles, beliefs, and best practices. We also invest heavily in purpose-built tools that support responsible AI across our products. Through Azure AI tooling, we can help scientists and developers alike build, evaluate, deploy, and monitor their applications for responsible AI outcomes like fairness, privacy, and explainability. We know what a privilege it is for customers to place their trust in Microsoft.

Brad Smith authored a blog about this transformative moment we find ourselves in as AI models continue to advance. In it, he described Microsoft's investments and journey as a company to build a responsible AI foundation. This began in earnest with the creation of AI principles in 2018, built on a foundation of transparency and accountability. We quickly realized principles are essential, but they aren't self-executing. A colleague described it best by saying "principles don't write code." This is why we operationalize those principles with tools and best practices, and help our customers do the same. In 2022, we shared our internal playbook for responsible AI development, our Responsible AI Standard, to invite public feedback and provide a framework that could help others get started.

I'm proud of the work Microsoft has done in this space. As the AI landscape evolves rapidly and new technologies emerge, safe and responsible AI will continue to be a top priority. To echo Brad, we'll approach whatever comes next with humility, listening, and sharing our learnings along the way.

In fact, our recent commitment to customers for our first-party copilots is a direct reflection of that. Microsoft will stand behind our customers from a legal perspective if copyright infringement lawsuits are brought against them for using our Copilots, putting that commitment to responsible AI innovation into action.

In this month's blog, I'll focus on a few things. First, how we're helping customers operationalize responsible AI with purpose-built tools, including products and updates designed to give customers the confidence to innovate safely on our trusted platform. I'll also share the latest on what we're delivering to help organizations prepare their data estates to move forward and succeed in the era of AI. Finally, I'll highlight some recent stories of organizations putting Azure to work. Let's dive in!

Availability of Azure AI Content Safety delivers better online experiences

One of my favorite innovations that reflects the constant collaboration between research, policy, and engineering teams at Microsoft is Azure AI Content Safety. This month we announced the availability of Azure AI Content Safety, a state-of-the-art AI system that helps keep user-generated and AI-generated content safe, ultimately creating better online experiences for everyone.

The blog shares the story of how South Australia's Department of Education is using this solution to protect students from harmful or inappropriate content in their new, AI-powered chatbot, EdChat. The chatbot has safety features built in to block inappropriate queries and harmful responses, allowing teachers to focus on the educational benefits rather than on oversight. It's fantastic to see this solution at work, helping create safer online environments!
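
To make the EdChat description concrete, here is a minimal Python sketch of the pattern it implies: screen the user's query before it reaches the model, then screen the model's reply before it reaches the user. This is not the Azure AI Content Safety SDK. `toy_classifier`, `is_allowed`, `safe_chat`, the keyword list, and the severity threshold of 4 are all hypothetical; only the four category names mirror the text categories the service reports (hate, self-harm, sexual, violence). In a real deployment, the classifier would be a call to the service.

```python
from typing import Callable, Dict

# Text harm categories reported by Azure AI Content Safety.
CATEGORIES = ("Hate", "SelfHarm", "Sexual", "Violence")

# A classifier maps text to a severity score per category (higher = more harmful).
Classifier = Callable[[str], Dict[str, int]]

# Hypothetical keyword list used only by the toy classifier below.
TOY_KEYWORDS = {"Violence": ("weapon", "attack")}


def toy_classifier(text: str) -> Dict[str, int]:
    """Hypothetical stand-in for a real moderation call: severity 4 on a keyword hit, else 0."""
    lowered = text.lower()
    return {
        category: 4 if any(word in lowered for word in TOY_KEYWORDS.get(category, ())) else 0
        for category in CATEGORIES
    }


def is_allowed(text: str, classify: Classifier, threshold: int = 4) -> bool:
    """Allow text only if every category's severity is below the (assumed) threshold."""
    scores = classify(text)
    return all(scores.get(category, 0) < threshold for category in CATEGORIES)


def safe_chat(user_message: str, generate: Callable[[str], str], classify: Classifier) -> str:
    """Screen the query before the model sees it, then screen the reply before the user sees it."""
    if not is_allowed(user_message, classify):
        return "Sorry, I can't help with that request."
    reply = generate(user_message)
    if not is_allowed(reply, classify):
        return "Sorry, I can't share that response."
    return reply


if __name__ == "__main__":
    echo_model = lambda msg: f"Here is some help with: {msg}"  # stand-in for a real LLM
    print(safe_chat("Explain photosynthesis", echo_model, toy_classifier))
    print(safe_chat("How do I build a weapon?", echo_model, toy_classifier))
```

Keeping the classifier as a plain callable means the same gate can wrap any model, and it could be extended to per-category thresholds if a deployment needs stricter limits for some categories than others.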

As organizations look to deepen their generative AI investments, many are concerned about trust, data privacy, and the safety and security of AI models and systems. That's where Azure AI can help. With Azure AI, organizations can build the next generation of AI applications safely by seamlessly integrating responsible AI tools and practices developed through years of AI research, policy, and engineering.

All of this is built on Azure's enterprise-grade foundation for data privacy, security, and compliance, so organizations can confidently scale AI while managing risk and reinforcing transparency. Microsoft even relies on Azure AI Content Safety to help protect users of our own AI-powered products. It's the same technology helping us responsibly release large language model-based experiences in products like GitHub Copilot, Microsoft Copilot, and Azure OpenAI Service, which all have safety systems built in.

New model availability and fine-tuning for Azure OpenAI Service models

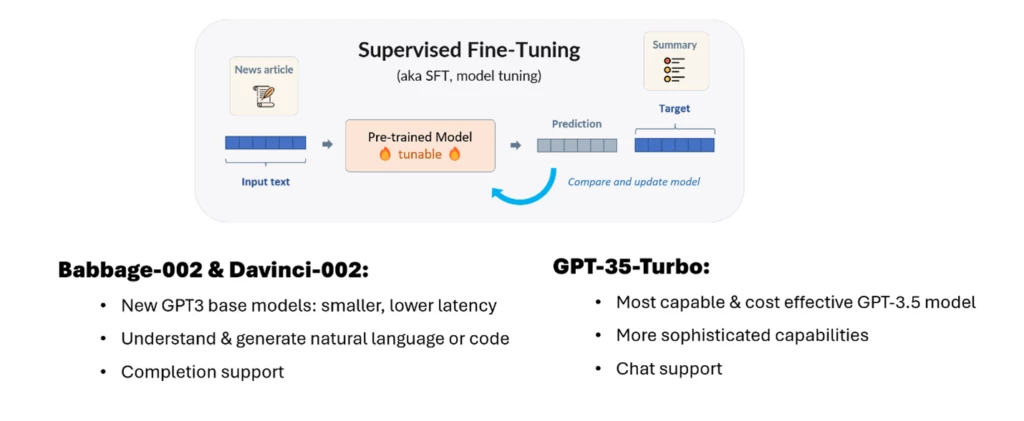

This month, we announced that two new base inference models (Babbage-002 and Davinci-002) are now generally available, and that fine-tuning for three models (Babbage-002, Davinci-002, and GPT-3.5-Turbo) is in public preview. Fine-tuning is one of the methods available to developers and data scientists who want to customize large language models for specific tasks.

Since we launched Azure OpenAI, it's been amazing to see the power of generative AI applied to new applications! Now you can customize your favorite OpenAI models for completion use cases, using the latest base inference models to solve your specific challenges, and then easily and securely deploy those customized models on Azure.

Fine-tuning works differently from the other common ways of adapting large language models to specific tasks. Methods like Retrieval Augmented Generation (RAG) and prompt engineering work by adding information and instructions to prompts; fine-tuning works by modifying the large language model itself.
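
The difference is easiest to see in code. The sketch below shows the RAG side under simple assumptions: a hypothetical word-overlap `retrieve` function picks the most relevant snippets, and `build_rag_prompt` pastes them into the prompt. Nothing about the model changes; only its input does. A production system would typically retrieve with embeddings and a search service rather than word overlap.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercased word set; a crude, hypothetical stand-in for a real retriever's scoring."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents that share the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(documents, key=lambda doc: len(query_words & tokenize(doc)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Augment the prompt with retrieved context; the model's weights are never changed."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    docs = [
        "Babbage-002 and Davinci-002 are base inference models.",
        "Fine-tuning modifies the model itself.",
        "Munich is in Germany.",
    ]
    print(build_rag_prompt("Which base models are available?", docs, k=1))
```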

With Azure OpenAI Service and Azure Machine Learning, you can use Supervised Fine Tuning, which lets you provide custom data (prompt/completion or conversational chat, depending on the model) to teach new skills to the base model.
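
As a rough illustration of those two data shapes, the sketch below serializes training examples as JSON Lines: `prompt`/`completion` pairs for base models such as Babbage-002 and Davinci-002, and chat-style `messages` lists for GPT-3.5-Turbo. The helper names and example content are hypothetical; check the Azure OpenAI fine-tuning documentation for the exact requirements of the model you're customizing.

```python
import json
from typing import Dict, List


def completion_record(prompt: str, completion: str) -> Dict[str, str]:
    """Prompt/completion pair, the shape used for base models such as babbage-002."""
    return {"prompt": prompt, "completion": completion}


def chat_record(system: str, user: str, assistant: str) -> Dict[str, List[Dict[str, str]]]:
    """One conversational example in the chat 'messages' shape used for GPT-3.5-Turbo."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
            {"role": "assistant", "content": assistant},
        ]
    }


def to_jsonl(records: List[dict]) -> str:
    """Serialize one JSON object per line (JSON Lines), the training-file format."""
    return "\n".join(json.dumps(record, ensure_ascii=False) for record in records) + "\n"


if __name__ == "__main__":
    # Hypothetical example: teaching a model to label support tickets.
    examples = [
        chat_record("You label support tickets.", "My invoice is wrong.", "billing"),
        chat_record("You label support tickets.", "The app crashes on launch.", "bug"),
    ]
    print(to_jsonl(examples), end="")
```

In practice you would write the output of `to_jsonl` to a `.jsonl` file and upload it as the training dataset.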

We recommend that companies begin with prompt engineering or RAG to set up a baseline before they embark on fine-tuning. It's the quickest way to get started, and we make it simple with tools like Prompt Flow or On Your Data. By starting with prompt engineering and RAG, you establish a baseline to compare against, so your effort is not wasted.
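
One simple way to use that baseline, sketched here with hypothetical names and a toy dataset: score the prompt-engineered or RAG system and the fine-tuned candidate on the same held-out examples, and compare. Exact-match accuracy is an assumption chosen for brevity; real evaluations usually need task-specific metrics.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (input, expected output)
System = Callable[[str], str]  # anything that maps an input to an output, e.g. a model call


def accuracy(system: System, examples: List[Example]) -> float:
    """Fraction of examples where the system's output exactly matches the expected answer."""
    if not examples:
        raise ValueError("need at least one held-out example")
    hits = sum(1 for text, expected in examples if system(text).strip() == expected)
    return hits / len(examples)


def compare_to_baseline(baseline: System, candidate: System, examples: List[Example]) -> float:
    """Positive result means the candidate (e.g. a fine-tuned model) beat the baseline."""
    return accuracy(candidate, examples) - accuracy(baseline, examples)


if __name__ == "__main__":
    held_out = [("2+2", "4"), ("3+3", "6"), ("5+5", "10")]
    baseline = lambda q: "4"  # toy "prompt-engineered" system
    candidate = lambda q: str(sum(int(n) for n in q.split("+")))  # toy "fine-tuned" system
    print(f"improvement over baseline: {compare_to_baseline(baseline, candidate, held_out):.2f}")
```

If the difference is small or negative, the cheaper prompt-engineering or RAG approach may be good enough on its own.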

Recent news from Azure Data and AI

We're constantly rolling out new solutions to help customers maximize their data and successfully put AI to work for their businesses. Here are some product announcements from the past month:

- Public preview of the new Synapse Data Science experience in Microsoft Fabric. We plan to release even more new experiences going forward to help you build data science solutions as part of your analytics workflows.

- Azure Cache for Redis recently introduced Vector Similarity Search, which enables developers to build generative AI-based applications using Azure Cache for Redis Enterprise as a robust, high-performance vector database. From there, you can use Azure AI Content Safety to check results and filter out harmful content, helping ensure safer experiences for users.

- Data Activator is now in public preview for all Microsoft Fabric users. This Fabric experience lets you drive automatic alerts and actions from your Fabric data, eliminating the need for constant manual monitoring of dashboards.
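
For readers new to vector databases, the query behind Vector Similarity Search boils down to "find the stored vectors closest to this one." The Python sketch below is a brute-force illustration of that idea using cosine similarity, not the Azure Cache for Redis API. The keys and three-dimensional vectors are made up; real embeddings come from an embedding model and have hundreds or thousands of dimensions.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat zero vectors as dissimilar to everything
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float], index: Dict[str, Sequence[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Brute-force nearest neighbours: score every stored vector, keep the k best."""
    scored = [(key, cosine_similarity(query, vector)) for key, vector in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Hypothetical toy "embeddings" keyed the way documents might be stored.
    index = {
        "doc:cats": [1.0, 0.0, 0.0],
        "doc:dogs": [0.9, 0.1, 0.0],
        "doc:cars": [0.0, 0.0, 1.0],
    }
    for key, score in top_k([1.0, 0.0, 0.0], index, k=2):
        print(f"{key}: {score:.3f}")
```

A linear scan like this is fine for a handful of vectors; Redis can instead use an HNSW index to answer nearest-neighbor queries approximately without comparing the query against every stored vector.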

Limitless innovation with Azure Data and AI

Customers and partners are experiencing the transformative power of AI

One of my favorite parts of this job is getting to see how businesses are using our data and AI solutions to solve business challenges and real-world problems. Seeing an idea go from pure possibility to a real-world solution never gets old.

With the AI safety tools and principles we've infused into Azure AI, businesses can move forward with confidence that what they build is built safely and responsibly. This means the innovation potential for any organization is truly limitless. Here are a few recent stories showing what's possible.

For any pet parents out there, the MetLife Pet app offers a one-stop shop for all your pet's medical care needs, including records and a digital library full of care information. The app uses Azure AI services to apply advanced machine learning that automatically extracts key text from documents, making it easier than ever to access your pet's health information.

HEINEKEN has begun using Azure OpenAI Service, built-in ChatGPT capabilities, and other Azure AI services to build chatbots for employees and to improve its existing business processes. Employees are excited about the technology's potential and are even suggesting new use cases that the company plans to roll out over time.

Consultants at Arthur D. Little turned to Azure AI to build an internal, generative AI-powered solution to search across vast amounts of content in complex document formats. Using natural language processing from Azure AI Language and Azure OpenAI Service, along with Azure Cognitive Search's advanced information retrieval technology, the firm can now transform difficult document formats, such as PowerPoint decks of 100-plus slides with fragmented text and images, instantly making them human-readable and searchable.

SWM is the municipal utility company serving Munich, Germany, and it relies on Azure IoT, AI, and big data analysis to drive every aspect of the city's energy, heating, and mobility transition forward more sustainably. The scalability of the Azure cloud platform removes all limits when it comes to using big data.

Generative AI has quickly become a powerful tool for businesses to streamline tasks and enhance productivity. Check out this recent story of five Microsoft partners (Commerce.AI, Datadog, Modern Requirements, Atera, and SymphonyAI) powering their own customers' transformations with generative AI. Microsoft's layered approach for these generative models is guided by our AI Principles to help ensure organizations build responsibly and comply with the Azure OpenAI Code of Conduct.

Opportunities to enhance your AI skills and expertise

New learning paths for business decision makers are available from the Azure Skilling team to help you hone your skills with Microsoft's AI technologies and stay ahead of the curve as AI reshapes the business landscape. We're also helping leaders in the healthcare and financial services industries learn more about how to apply AI in their everyday work. Check out the new learning material on knowledge mining and on developing generative AI solutions with Azure OpenAI Service.

The Azure AI and Azure Cosmos DB University Hackathon kicked off this month. The hackathon is a call to students worldwide to reimagine the future of education using Azure AI and Azure Databases. Read the blog post to learn more and register.

If you're already using generative AI and want to learn more, or if you haven't started yet and aren't sure where to begin, I have a new resource for you. Here are 25 tips to help you unlock the potential of generative AI through better prompts. These tips help you define your inputs so you get more accurate and relevant outputs from language models like ChatGPT.

Just like in real life, HOW you ask for something can limit what you get in return, so it's important to get the inputs right. Whether you're conducting market research, sourcing ideas for your child's Halloween costume, or (my personal favorite) creating marketing narratives, these prompt tips will help you get better results.
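
The 25 tips aren't reproduced here, but the idea of defining your input and output can be illustrated with a small, hypothetical helper that spells out the role, task, context, constraints, and expected output format in one prompt. This structure is one reasonable convention, not a format that ChatGPT or Azure OpenAI requires.

```python
from typing import List, Optional


def build_prompt(
    task: str,
    context: str,
    output_format: str,
    role: Optional[str] = None,
    constraints: Optional[List[str]] = None,
) -> str:
    """Assemble a prompt that states who the model is, what to do, and what to return."""
    parts: List[str] = []
    if role:
        parts.append(f"You are {role}.")
    parts.append(f"Task: {task}")
    if context:
        parts.append(f"Context: {context}")
    for rule in constraints or []:
        parts.append(f"Constraint: {rule}")
    # Naming the output format explicitly is what makes the result predictable.
    parts.append(f"Output format: {output_format}")
    return "\n".join(parts)


if __name__ == "__main__":
    print(
        build_prompt(
            task="Suggest three Halloween costume ideas",
            context="For a seven-year-old who loves space",
            output_format="A numbered list, one line per idea",
            role="a creative party planner",
            constraints=["Use only items found at home"],
        )
    )
```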

Embrace the future of data and AI with upcoming events

PASS Data Community Summit 2023 is just around the corner, from November 14, 2023, through November 17, 2023. This is an opportunity to connect, share, and learn with your peers and industry thought leaders, and to celebrate all things data! The full agenda is now live, so you can register and start planning your Summit week.

I hope you'll join us for Microsoft Ignite 2023, which is also next month, from November 14, 2023, through November 17, 2023. If you're not headed to Seattle, be sure to register for the virtual experience. There's so much in store for you to see AI transformation in action!

The post What's new in Data & AI: Prioritize AI safety to scale with confidence appeared first on Azure Blog.
