Cybersecurity startup Simbian has launched three LLM AI Agents that work as virtual employees but with the speed, stamina, and accuracy of robots.

Large Language Model (LLM) artificial intelligence – typified by OpenAI’s ChatGPT – has revolutionized and perhaps democratized the use of AI. While AI now offers many advantages to everyone, these advantages are primarily based on doing things better and faster.

Cybercriminals always adopt options that do things better and faster. Since the 2022 release of ChatGPT we have been waiting for LLMs to increase the speed and scale of criminal cyberattacks; and it has already begun. We know the only way to scale our defense to match the scale of AI-based attacks is to use the same AI.

But how? Cyberdefense has used machine learning based AI for more than a decade in areas such as anti-virus, anomaly / behavior detection, and EDR. It’s been a boon, but it will not cope with the expected growth and sophistication of LLM-based attacks. We need to scale up our AI defenses.

Here it is worth briefly looking at the relationship between ML and LLM, because although the latter offers greater potential, it is effectively just a specific application or type of ML. While this is by no means a scientific distinction, ML provides primarily binary decisions (yes/no answers) and requires relatively low computing power that can be provided in-house, while LLMs provide analog (conversational) decisions and require huge amounts of processing power that usually needs to be provided via the cloud.

As a result, ML can detect a potential problem, but cannot easily explain what it is, nor what should be done about it. Hence the growth in alerts and the well-known alert fatigue now suffered by security teams.

The solution is linguistically simple even if technically difficult – harness the power of LLMs to guide security teams to the correct course of action to remediate a detected issue.

This is the approach taken by Simbian with the release of three new AI agents based on LLM technology. The first is the SOC Agent. It autonomously investigates and responds to security alerts, and dramatically improves the security team’s mean time to respond.


The second is the Threat Hunting Agent. Because LLMs can ingest free text and respond in kind, the agent can analyze a larger volume of threat information. It draws on intelligence feeds and verbose threat reports to generate threat hunting hypotheses based on an actor’s TTPs. “It blocks, pivots, and hunts across the entire environment, providing comprehensive protection unlike traditional SIEMs and XDRs which are limited to the logs they store,” claims Simbian.

The third is the GRC Agent, which uses the LLM’s conversational capabilities to respond in areas like customers’ security questionnaires and third-party supply chain risk analyses. The result increases accuracy and dramatically reduces the time taken (Simbian suggests that tasks that would normally take more than three days can be done in less than an hour).

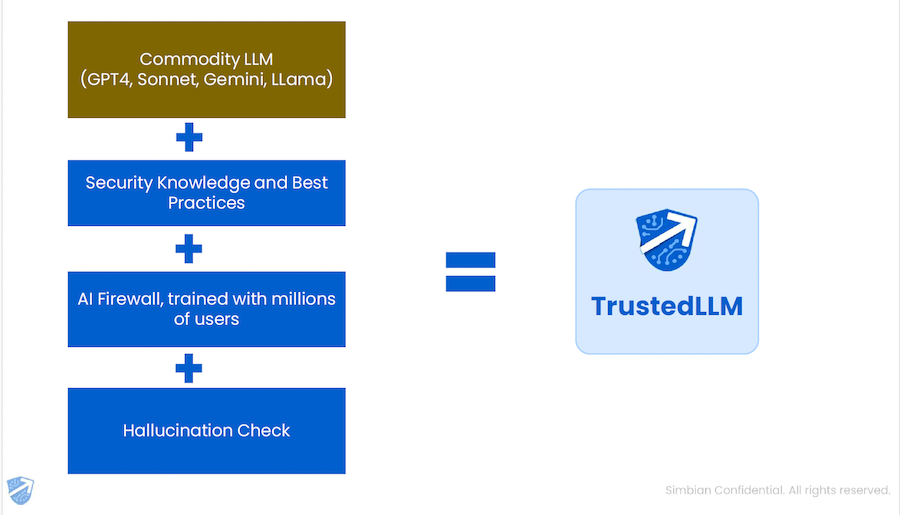

One of the dangers in using a commodity LLM (ChatGPT, Gemini, Llama, etcetera) directly is the possibility of leaking internal data. Simbian’s co-founder and CEO, Ambuj Kumar, explains how his agents work and avoid this issue. The result is Simbian’s TrustedLLM.

“We start with the core LLM, but then we work on it, fine tuning context learning built for security, to provide our own TrustedLLM; itself built for a single purpose, to assist in security tasks.” He likened the process to a student going from High School to university, where all the silly learning gets unlearned and replaced by serious learning. “We start with the commodity LLM and add loads of security knowledge and best practices. We add an AI firewall, so anything that our TrustedLLM sees from outside of the domain, and anything it generates but can be seen outside the domain, is safe. And then we add our hallucination checks so we can see if it’s being silly and hallucinating. The combination of all this we call TrustedLLM.”
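The layering Kumar describes can be pictured with a minimal Python sketch. It is an illustration only, not Simbian's implementation: the function names, the redaction pattern, and the idea of checking CVE identifiers against scanner evidence are all assumptions made for the example. It shows a filter applied to text entering and leaving the model, followed by a simple check that flags claims the evidence does not support.

```python
import re
from typing import Callable, List

# Hypothetical pattern for secrets that should never cross the boundary.
SECRET = re.compile(r"\b(?:api[_-]?key|password)\s*[:=]\s*\S+", re.IGNORECASE)
CVE = re.compile(r"CVE-\d{4}-\d{4,}")


def firewall(text: str) -> str:
    """Redact secret-looking strings (assumed stand-in for an 'AI firewall')."""
    return SECRET.sub("[REDACTED]", text)


def unsupported_claims(answer: str, evidence: str) -> List[str]:
    """Return CVE IDs named in the answer that do not appear in the evidence."""
    known = set(CVE.findall(evidence))
    return [cve for cve in CVE.findall(answer) if cve not in known]


def guarded_answer(prompt: str, evidence: str, model: Callable[[str], str]) -> str:
    """Filter the input, call the model, filter the output, then check it."""
    raw = model(firewall(prompt))
    safe = firewall(raw)
    flagged = unsupported_claims(safe, evidence)
    if flagged:
        return "needs review: unverified " + ", ".join(flagged)
    return safe


def fake_model(prompt: str) -> str:
    """Stub standing in for an LLM call; it invents one CVE on purpose."""
    return "Patch CVE-2024-0001 and CVE-2024-9999; password=hunter2"


if __name__ == "__main__":
    evidence = "Scanner found CVE-2024-0001 on web-01"
    print(guarded_answer("password: abc what should we patch?", evidence, fake_model))
```

Here the stub model mentions an identifier that the evidence never contained, so the answer is held for review instead of being passed on.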

TrustedLLM doesn’t make existing ML investments redundant. Kumar explained the reasoning with an analogy. “Early LLMs were poor at math,” he said. “They tried to generate a math result from stuff they had collected off the internet, which may have been wrong. Now they just recognize the query as mathematical and invoke a calculator to provide the correct result.”

TrustedLLM does the same with a domain’s existing security tools. “It will use CrowdStrike, it will use Palo Alto, it will use Cisco – it will use all the security tools and all the traditional machine learning tools that are available to it.” Just as commodity LLMs improved their ability to do math by using a calculator, so does Simbian’s LLM improve its security functionality by using the data already available.
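The calculator analogy amounts to routing: recognize what kind of query has arrived and hand it to the tool best placed to answer it. The short Python sketch below illustrates the idea under stated assumptions. The `route` function, the regular expressions, and the `edr_lookup` stand-in are hypothetical and do not reflect any vendor's API; a real agent would let the model choose the tool and would call the actual product.

```python
import ast
import operator
import re

# Arithmetic operators the toy calculator accepts.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str):
    """Evaluate basic arithmetic by walking the syntax tree (no eval())."""
    def _eval(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -_eval(node.operand)
        raise ValueError("unsupported expression")
    return _eval(ast.parse(expression, mode="eval").body)


def edr_lookup(host: str) -> str:
    """Hypothetical stand-in for querying an existing security tool."""
    return f"no open detections for {host}"


MATH_PATTERN = re.compile(r"[\d\s.+\-*/()]+")
HOST_PATTERN = re.compile(r"\bhost\s+(\S+)")


def route(query: str) -> str:
    """Send the query to a tool when one fits, else fall back to the model."""
    query = query.strip()
    if MATH_PATTERN.fullmatch(query):
        try:
            return f"calculator: {calculator(query)}"
        except (ValueError, SyntaxError, ZeroDivisionError):
            pass  # not valid arithmetic after all; fall through
    host = HOST_PATTERN.search(query)
    if host:
        return f"edr: {edr_lookup(host.group(1))}"
    return "llm: answered from the model's own knowledge"


if __name__ == "__main__":
    for q in ("2 + 3 * 4", "check host web-01", "what is phishing?"):
        print(q, "->", route(q))
```

The point of the design is that the model does not guess at answers a dedicated tool can compute or look up; it only decides which tool to ask.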

“Our AI Agents,” says the firm, “help businesses get more out of these tools by connecting the dots and automating security operations at the last mile.”

Simbian emerged from stealth six months ago with $10 million in seed funding. These AI Agents are the first fruits of its effort to build an autonomous AI-based security platform.

