Source: Krot Studio via Shutterstock

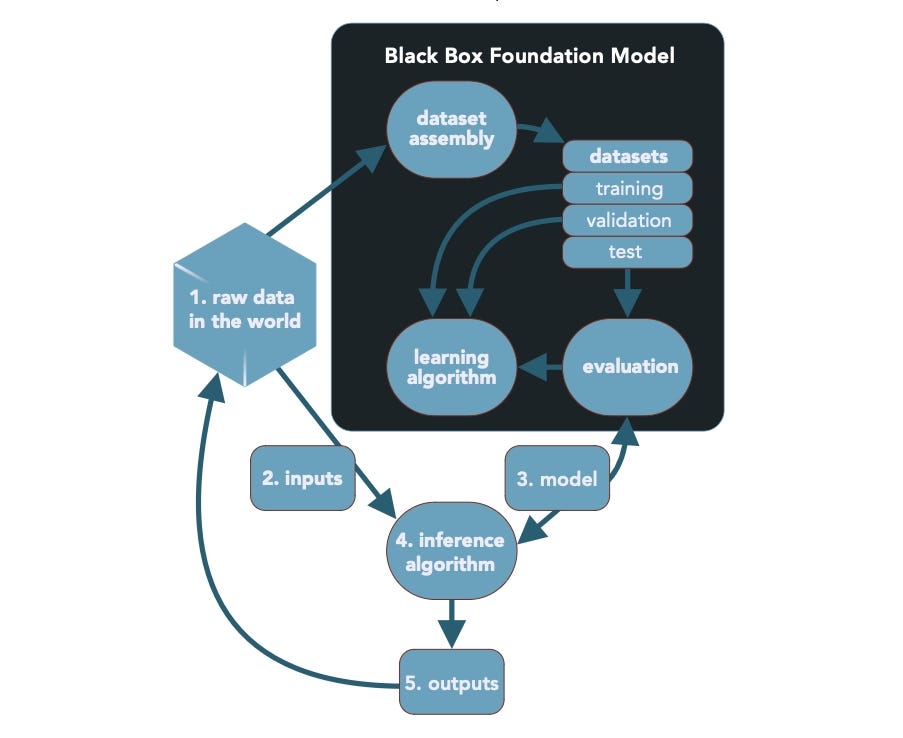

Many of the security problems of large language models (LLMs) boil down to a simple fact: The heart of all LLMs is a black box.

The end users of LLMs typically do not have a lot of information on how providers collected and cleaned the data used to train their models, and the model developers typically conduct only a shallow evaluation of the data because the volume of information is just too vast. This lack of visibility into how artificial intelligence (AI) makes decisions is the root cause of more than a quarter of risks posed by LLMs, according to a new report from the Berryville Institute of Machine Learning (BIML) that describes 81 risks associated with LLMs.

The goal of the report, "An Architectural Risk Analysis of Large Language Models," is to provide CISOs and other security practitioners with a way of thinking about the risks posed by machine learning (ML) and AI models, especially LLMs and the next-generation large multimodal models (LMMs), so they can identify those risks in their own applications, says Gary McGraw, co-founder of BIML.

"Those things that are in the black box are the risk decisions that are being made by Google and Open AI and Microsoft and Meta on your behalf without you even knowing what the risks are," McGraw says. "We think that it would be very helpful to open up the black box and answer some questions."

A Common Language for Adversarial AI

As businesses rush to adopt AI models, the risks and security of those models have become increasingly important. The researchers at BIML are not the only group attempting to bring order to the chaos of adversarial AI and ML security. In early January, the US National Institute of Standards and Technology (NIST) released the latest version of "Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations," which focuses on creating a common language for discussing threats to AI.

The NIST paper breaks down the AI landscape into two broad categories: models used to make predictions (PredAI) and models used to generate content (GenAI), such as LLMs. While predictive AI models suffer from the typical triad of threats — confidentiality, integrity and availability — generative AI systems also have to deal with abuse, such as prompt injection that accesses queries or resources, according to NIST.

Coming up with a common terminology is important as more researchers jump into the field, says Apostol Vassilev, research team manager in the computer security division at NIST and a co-author of the NIST report.

"Companies have incorporated generative AI into their main product lines now, and that new technology wave is going to hit the mass market soon," he says. "So it is important to figure out what's going on in machine learning so people know in advance what they're getting into and how best to prepare for this new phenomenon."

In the last two years, researchers have published more than 5,000 papers on adversarial machine learning to the online publishing platform ArXiv, according to NIST. The previous decade saw fewer than 3,500 papers published between 2011 and 2020.

The Black Box of LLMs

A large portion of the creation of GenAI and many other ML models boil down to analytical hacks, says BIML's McGraw. Large data models are about training a machine to use data to correlate between inputs and outputs without the developers or ML experts knowing how to write an explicit program to accomplish the task.

Many of the steps in creating an LLM are unknown and potentially unknowable. Source: BIML

"The recourse you have is to get a huge pile of 'what' and to train a machine to become the 'what' — and that's what machine learning is for," he says. "The problem is, when we do that with a big pile of 'what,' we still don't know how it works. So anything we can do to understand where that big pile of 'what' came from, the better off we're going to be."

In the BIML paper," researchers built on the 78 risks previously identified in the institute's general ML report. The researchers identified a set 81 risks that LLMs face and classified 23 as directly related to black-box issues, BIML's McGraw says.

"We are not anti-machine learning," he says. "We don't say throw out the baby [with the bath water]. We say, why don't you scrub that baby? Wait a minute, tell us exactly, where did you get the tub?"

Government regulations are needed because defenses are nearly impossible to create when much of the architecture of an LLM is in a black box. Regulators should focus on establishing rules for the black box and the LLM foundation models, not the users of those models, McGraw says.

"[T]he companies who create LLM foundation models should be held accountable for the risks they have brought into the world," the BIML researchers state in the paper. "We further believe that trying to constrain the use of LLMs by controlling their use without controlling their very design and implementation is folly."

Better Defenses, Better Policy

While defenses are basic, so are attacks because attackers — for the most part — can more easily accomplish their goals with a traditional cyberattack than by targeting AI. Typically, attackers targeting AI models are likely after one of three things: They want to trick the model, steal intellectual property from the model, or manipulate or extract data from the model, says Daryan Dehghanpisheh, president and founder of Protect AI.

"If I'm the bad actor and I want to achieve any one of those three things, we think that there are a whole lot of easier ways to do that," he says. "Hunting the ML or AI engineers of a company, going out and scraping all their LinkedIn profiles ... and then masquerading as some other entity and social engineering them."

Defending models is also very difficult. Current approaches, which essentially try to put guardrails around LLMs, are easy to circumvent because malicious prompts can eventually bypass any set of rules, NIST's Vassilev says.

"It turns out that practically all the mitigations that are out there are limited and incomplete," he says. "We're going to be in a cat-and-mouse game between attackers and defenders for a while until we figure out better lines of defenses and better techniques for how to protect ourselves."

10 months ago

43

10 months ago

43