Microsoft Azure has delivered industry-leading results for AI inference workloads among cloud service providers in the most recent MLPerf Inference results published publicly by MLCommons. The Azure results were achieved using the new NC H100 v5 series virtual machines (VMs) powered by NVIDIA H100 NVL Tensor Core GPUs and reinforced the commitment from Azure to designing AI infrastructure that is optimized for training and inferencing in the cloud.

The evolution of generative AI models

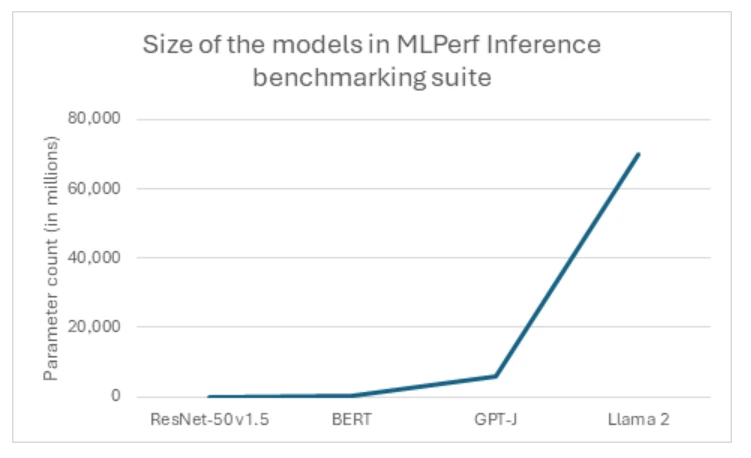

Models for generative AI are rapidly expanding in size and complexity, reflecting a prevailing trend in the industry toward ever-larger architectures. Industry-standard benchmarks and cloud-native workloads consistently push the boundaries, with models now reaching billions and even trillions of parameters. A prime example of this trend is the recent unveiling of Llama2, which boasts a staggering 70 billion parameters, marking it as MLPerf’s most significant test of generative AI to date (figure 1). This monumental leap in model size is evident when comparing it to previous industry standards such as the Large Language Model GPT-J, which pales in comparison with roughly 10x fewer parameters. Such exponential growth underscores the evolving demands and ambitions within the AI industry, as customers strive to tackle increasingly complex tasks and generate more sophisticated outputs.

Tailored specifically to address the dense or generative inferencing needs that models like Llama 2 require, the Azure NC H100 v5 VMs mark a significant leap forward in performance for generative AI applications. Their purpose-driven design ensures optimized performance, making them an ideal choice for organizations seeking to harness the power of AI with reliability and efficiency. With the NC H100 v5-series, customers can expect enhanced capabilities with these new standards for their AI infrastructure, empowering them to tackle complex tasks with ease and efficiency.

Figure 1: Evolution of the size of the models in the MLPerf Inference benchmarking suite.

However, the transition to larger model sizes necessitates a shift toward a different class of hardware that is capable of accommodating the large models on fewer GPUs. This paradigm shift presents a unique opportunity for high-end systems, highlighting the capabilities of advanced solutions like the NC H100 v5 series. As the industry continues to embrace the era of mega-models, the NC H100 v5 series stands ready to meet the challenges of tomorrow’s AI workloads, offering unparalleled performance and scalability in the face of ever-expanding model sizes.
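To make the sizing argument concrete, here is a rough, weights-only estimate of how much accelerator memory a model needs. It is a minimal sketch under stated assumptions, not how MLPerf submissions are sized: the bytes-per-parameter values (2 for FP16, 1 for FP8) are assumptions, the helper names `weights_gb` and `min_gpus` are hypothetical, and the estimate ignores the KV cache, activations, and runtime overhead, all of which add to the real footprint.

```python
import math

GB = 1e9  # decimal gigabytes, an assumption made for simplicity


def weights_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed for the model weights alone, in GB."""
    return num_params * bytes_per_param / GB


def min_gpus(num_params: float, bytes_per_param: float, gpu_mem_gb: float) -> int:
    """Smallest GPU count whose combined memory holds the weights."""
    return math.ceil(weights_gb(num_params, bytes_per_param) / gpu_mem_gb)


LLAMA2_70B = 70e9  # parameter counts as quoted in the article
GPT_J_6B = 6e9

for name, params in [("Llama2 70B", LLAMA2_70B), ("GPT-J 6B", GPT_J_6B)]:
    # Bytes per parameter are assumed values for illustration only.
    for precision, nbytes in [("FP16", 2), ("FP8", 1)]:
        print(f"{name} {precision}: {weights_gb(params, nbytes):.0f} GB of weights, "
              f"at least {min_gpus(params, nbytes, 94)} x 94 GB GPU(s)")
```

Under these assumptions, the FP16 weights of the 70-billion-parameter model (about 140 GB) do not fit on a single GPU, while GPT-J's weights fit with room to spare. Memory left over after the weights are loaded is what remains available for the KV cache and larger batches.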

Enhanced performance with purpose-built AI infrastructure

The NC H100 v5-series shines with purpose-built infrastructure, featuring a superior hardware configuration that yields remarkable performance gains compared to its predecessors. Each GPU within this series is equipped with 94GB of HBM3 memory. This substantial increase in memory capacity and bandwidth translates into a 17.5% boost in memory size and a 64% boost in memory bandwidth over the previous generations. Powered by NVIDIA H100 NVL PCIe GPUs and 4th-generation AMD EPYC™ Genoa processors, these virtual machines feature up to 2 GPUs, alongside up to 96 non-multithreaded AMD EPYC Genoa processor cores and 640 GiB of system memory.

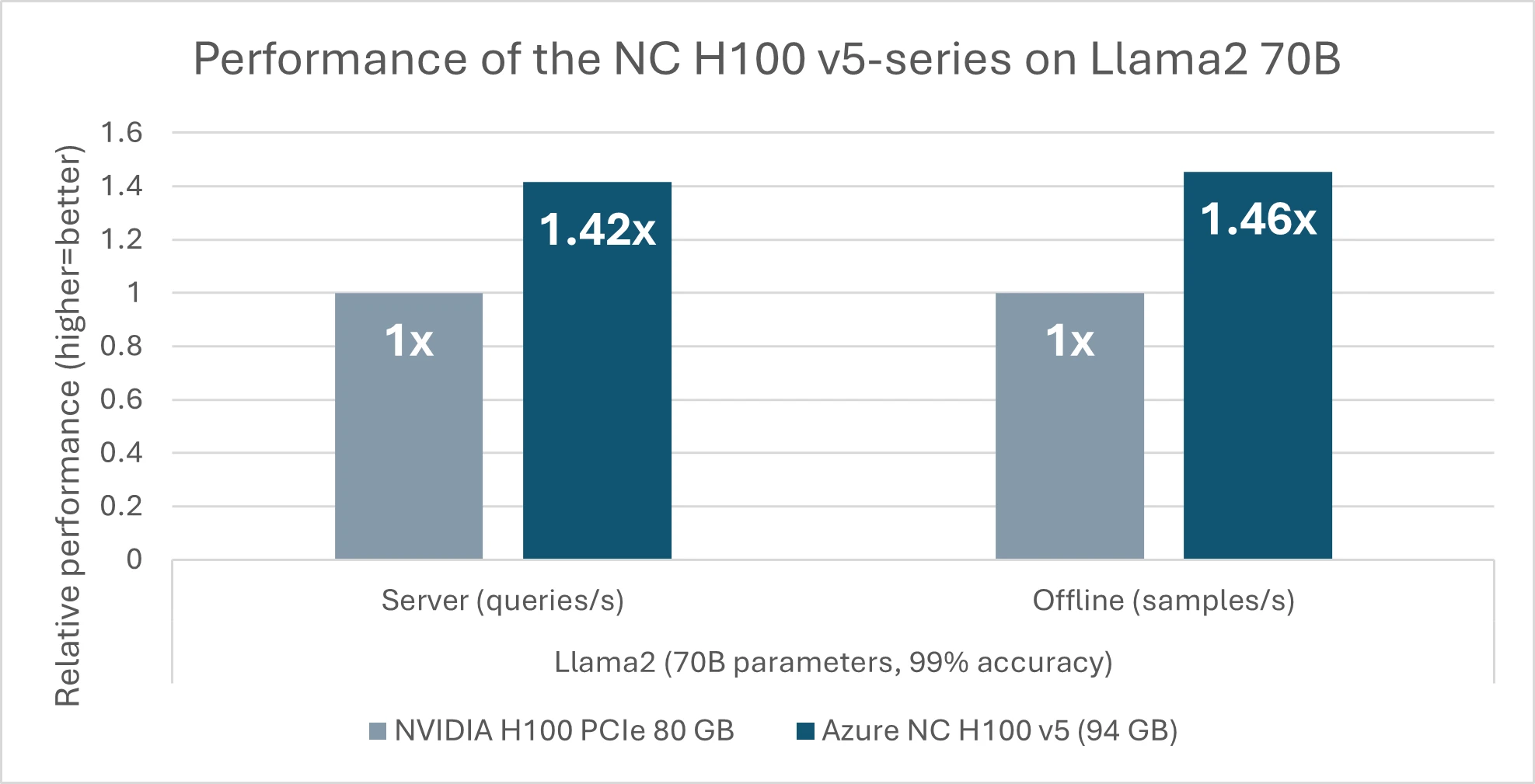

In today’s announcement from MLCommons, the NC H100 v5 series premiered performance results in the MLPerf Inference v4.0 benchmark suite. Noteworthy among these achievements is a 46% performance gain over competing products equipped with GPUs of 80GB of memory (figure 2), solely based on the impressive 17.5% increase in memory size (94 GB) of the NC H100 v5-series. This leap in performance is attributed to the series’ ability to fit the large models into fewer GPUs efficiently. For smaller models like GPT-J with 6 billion parameters, there is a notable 1.6x speedup from the previous generation (NC A100 v4) to the new NC H100 v5. This enhancement is particularly advantageous for customers with dense inferencing jobs, as it enables them to run multiple tasks in parallel with greater speed and efficiency while utilizing fewer resources.

Figure 2: Azure results on the model Llama2 (70 billion parameters) from MLPerf Inference v4.0 in March 2024 (4.0-0004) and (4.0-0068).
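The memory percentage quoted above is a simple ratio. As a quick check, the snippet below recomputes it from the two capacities named in the text (94 GB versus 80 GB). The helper name `pct_increase` is hypothetical and used only for illustration.

```python
def pct_increase(new: float, old: float) -> float:
    """Relative increase of `new` over `old`, in percent."""
    return (new / old - 1.0) * 100.0


# GPU memory per accelerator in GB, as quoted in the article.
mem_gain = pct_increase(94, 80)
print(f"Memory increase: {mem_gain:.1f}%")  # prints "Memory increase: 17.5%"
```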

Performance delivering a competitive edge

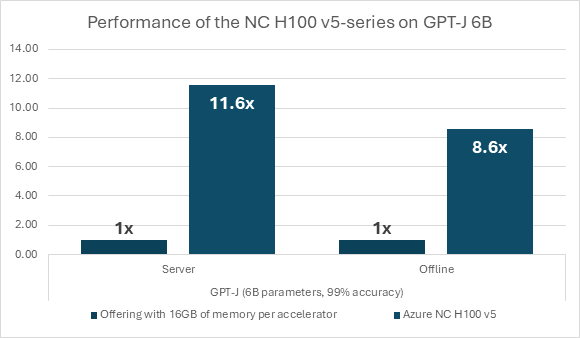

The increase in performance is important not just compared to previous generations of comparable infrastructure solutions. In the MLPerf benchmark results, the results for Azure’s NC H100 v5 series virtual machines stand out compared to the other cloud computing submissions. Notably, when compared to cloud offerings with smaller memory capacities per accelerator, such as those with 16GB of memory per accelerator, the NC H100 v5 series VMs exhibit a substantial performance boost. With nearly six times the memory per accelerator, Azure’s purpose-built AI infrastructure series demonstrates a performance speedup of 8.6x to 11.6x (figure 3). This represents a performance increase of 50% to 100% for every byte of GPU memory, showcasing the unparalleled capacity of the NC H100 v5 series. These results underscore the series’ capacity to lead the performance standards in cloud computing, offering organizations a robust solution to address their evolving computational requirements.

Figure 3: Performance results on the model GPT-J (6 billion parameters) from MLPerf Inference v4.0 in March 2024 on Azure NC H100 v5 (4.0-0004) and an offering with 16GB of memory per accelerator (4.0-0045), with 1 accelerator each.
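The per-byte figure can be reproduced by dividing each reported speedup by the memory ratio between the two accelerators. The following is a small sketch of that arithmetic using only numbers quoted in the article; the variable names are illustrative, and reading "per byte" as "speedup divided by memory ratio" is an assumption about how the figure was derived.

```python
NC_H100_V5_MEM_GB = 94   # memory per accelerator, from the article
OTHER_MEM_GB = 16        # competing offering, from the article
SPEEDUPS = (8.6, 11.6)   # reported range of speedups (figure 3)

# 94 / 16 = 5.875, the "nearly six times" memory advantage.
mem_ratio = NC_H100_V5_MEM_GB / OTHER_MEM_GB

# Speedup normalized by memory, expressed as a percentage gain per byte.
per_byte_gains = [(s / mem_ratio - 1) * 100 for s in SPEEDUPS]

for speedup, gain in zip(SPEEDUPS, per_byte_gains):
    print(f"{speedup}x speedup -> about {gain:.0f}% more performance per byte")
```

With these inputs the gains come out at roughly 46% and 97%, consistent with the rounded 50% to 100% range stated in the text.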

In conclusion, the launch of the NC H100 v5 series marks a significant milestone in Azure’s relentless pursuit of innovation in cloud computing. With its outstanding performance, advanced hardware capabilities, and seamless integration with Azure’s ecosystem, the NC H100 v5 series is revolutionizing the landscape of AI infrastructure, enabling organizations to fully leverage the potential of generative AI inference workloads. The latest MLPerf Inference v4.0 results underscore the NC H100 v5 series’ unparalleled capacity to excel in the most demanding AI workloads, setting a new standard for performance in the industry. With its exceptional performance metrics and enhanced efficiency, the NC H100 v5 series reaffirms its position as a frontrunner in the realm of AI infrastructure, empowering organizations to unlock new possibilities and achieve greater success in their AI initiatives.

Furthermore, Microsoft’s commitment, as announced during the NVIDIA GPU Technology Conference (GTC), to continue innovating by introducing even more powerful GPUs to the cloud, such as the NVIDIA Grace Blackwell GB200 Tensor Core GPUs, further enhances the prospects for advancing AI capabilities and driving transformative change in the cloud computing landscape.

Learn more about Azure generative AI

- New Azure NC H100 v5 VMs Optimized for Generative AI and HPC workloads is now Generally Available | Microsoft Community Hub

- NCads H100 v5-series—Azure Virtual Machines | Microsoft Learn

- Benchmark MLPerf Inference: Datacenter | MLCommons V4.0

The post Microsoft Azure delivers game-changing performance for generative AI Inference appeared first on Microsoft Azure Blog.
