At NVIDIA GTC, Microsoft and NVIDIA are announcing new offerings across a breadth of solution areas, from leading AI infrastructure to new platform integrations and industry breakthroughs. Today’s news expands our long-standing collaboration, which has paved the way for revolutionary AI innovations that customers are now bringing to fruition.

Microsoft and NVIDIA collaborate on Grace Blackwell 200 Superchip for next-generation AI models

Microsoft and NVIDIA are bringing the power of the NVIDIA Grace Blackwell 200 (GB200) Superchip to Microsoft Azure. The GB200 is a new processor designed specifically for large-scale generative AI workloads, data processing, and high-performance workloads, featuring up to a massive 16 TB/s of memory bandwidth and up to an estimated 45 times the inference performance on trillion-parameter models relative to the previous Hopper generation of servers.

Microsoft has worked closely with NVIDIA to ensure their GPUs, including the GB200, can handle the latest large language models (LLMs) trained on Azure AI infrastructure. These models require enormous amounts of data and compute to train and run, and the GB200 will enable Microsoft to help customers scale these resources to new levels of performance and accuracy.

Microsoft will also deploy an end-to-end AI compute fabric with the recently announced NVIDIA Quantum-X800 InfiniBand networking platform. By taking advantage of its in-network computing capabilities with SHARPv4, and its added support for FP8 for leading-edge AI techniques, NVIDIA Quantum-X800 extends the GB200’s parallel computing tasks into massive GPU scale.

Azure will be one of the first cloud platforms to deliver on GB200-based instances

Microsoft has committed to bringing GB200-based instances to Azure to support customers and Microsoft’s AI services. The new Azure instances, based on the latest GB200 and NVIDIA Quantum-X800 InfiniBand networking, will help accelerate the generation of frontier and foundational models for natural language processing, computer vision, speech recognition, and more. Azure customers will be able to use the GB200 Superchip to create and deploy state-of-the-art AI solutions that can handle massive amounts of data and complexity, while accelerating time to market.

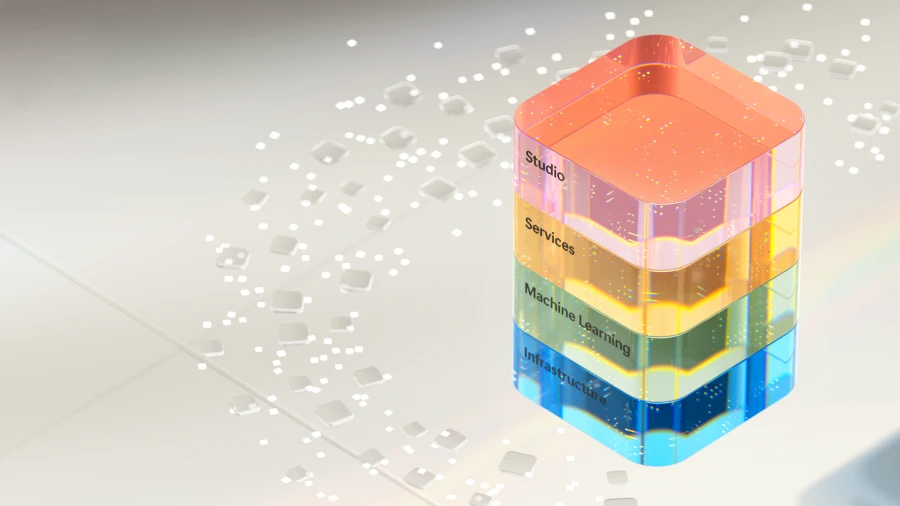

Azure also offers a range of services to help customers optimize their AI workloads, such as Microsoft Azure CycleCloud, Azure Machine Learning, Microsoft Azure AI Studio, Microsoft Azure Synapse Analytics, and Microsoft Azure Arc. These services provide customers with an end-to-end AI platform that can handle data ingestion, processing, training, inference, and deployment across hybrid and multi-cloud environments.

Delivering on the promise of AI to customers worldwide

With a powerful foundation of Azure AI infrastructure that uses the latest NVIDIA GPUs, Microsoft is infusing AI across every layer of the technology stack, helping customers drive new benefits and productivity gains. Now, with more than 53,000 Azure AI customers, Microsoft provides access to the best selection of foundation and open-source models, including both LLMs and small language models (SLMs), all integrated deeply with infrastructure data and tools on Azure.

The recently announced partnership with Mistral AI is also a great example of how Microsoft is enabling leading AI innovators with access to Azure’s cutting-edge AI infrastructure to accelerate the development and deployment of next-generation LLMs. Azure’s growing AI model catalog offers more than 1,600 models, letting customers choose from the latest LLMs and SLMs from providers including OpenAI, Mistral AI, Meta, Hugging Face, Deci AI, NVIDIA, and Microsoft Research. Azure customers can choose the best model for their use case.

“We are thrilled to embark on this partnership with Microsoft. With Azure’s cutting-edge AI infrastructure, we are reaching a new milestone in our expansion, propelling our innovative research and practical applications to new customers everywhere. Together, we are committed to driving impactful progress in the AI industry and delivering unparalleled value to our customers and partners globally.”

Arthur Mensch, Chief Executive Officer, Mistral AI

General availability of Azure NC H100 v5 VM series, optimized for generative inferencing and high-performance computing

Microsoft also announced the general availability of the Azure NC H100 v5 VM series, designed for mid-range training, inferencing, and high-performance compute (HPC) simulations; it offers high performance and efficiency.

As generative AI applications expand at incredible speed, the fundamental language models that empower them will also expand to include both SLMs and LLMs. In addition, artificial narrow intelligence (ANI) models will continue to evolve, focusing on more precise predictions rather than the creation of novel data, to keep enhancing their use cases. Their applications include tasks such as image classification, object detection, and broader natural language processing.

Using the robust capabilities and scalability of Azure, we offer computational tools that empower organizations of all sizes, regardless of their resources. Azure NC H100 v5 VMs are yet another computational tool made generally available today that will do just that.

The Azure NC H100 v5 VM series is based on the NVIDIA H100 NVL platform, which offers two classes of VMs, ranging from one to two NVIDIA H100 94GB PCIe Tensor Core GPUs connected by NVLink with 600 GB/s of bandwidth. This VM series supports PCIe Gen5, which provides the highest communication speeds (128 GB/s bidirectional) between the host processor and the GPU. This reduces the latency and overhead of data transfer and enables faster and more scalable AI and HPC applications.
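To put the quoted bandwidth figures in perspective, the following back-of-the-envelope sketch estimates how long it would take to move one GPU's worth of data (94 GB) over each link. It is only an illustration: the function name `transfer_seconds` is hypothetical, the rates are treated as sustained peak values with no protocol overhead, and the even per-direction split of the bidirectional PCIe figure is an assumption rather than something stated above.

```python
def transfer_seconds(size_gb: float, bandwidth_gb_per_s: float) -> float:
    """Ideal time to move size_gb at a sustained rate, ignoring all overhead."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return size_gb / bandwidth_gb_per_s


# Figures quoted in the text above.
PCIE_GEN5_BIDIR_GB_S = 128.0  # PCIe Gen5, bidirectional
NVLINK_GB_S = 600.0           # NVLink between the two GPUs, taken at face value
GPU_MEMORY_GB = 94.0          # memory per H100 NVL GPU

# Assumption: the bidirectional PCIe figure splits evenly per direction.
PCIE_GEN5_ONE_WAY_GB_S = PCIE_GEN5_BIDIR_GB_S / 2

if __name__ == "__main__":
    host_to_gpu = transfer_seconds(GPU_MEMORY_GB, PCIE_GEN5_ONE_WAY_GB_S)
    gpu_to_gpu = transfer_seconds(GPU_MEMORY_GB, NVLINK_GB_S)
    print(f"Host to GPU over PCIe Gen5: {host_to_gpu:.2f} s")
    print(f"GPU to GPU over NVLink:     {gpu_to_gpu:.2f} s")
```

Under these assumptions, filling a GPU from host memory takes on the order of a second and a half, while a GPU-to-GPU copy over NVLink is roughly ten times faster. Real transfers will be slower than these ideal lower bounds.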

The VM series also supports NVIDIA multi-instance GPU (MIG) technology, enabling customers to partition each GPU into up to seven instances, providing flexibility and scalability for diverse AI workloads. This VM series offers up to 80 Gbps network bandwidth and up to 8 TB of local NVMe storage on full node VM sizes.
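As a rough capacity-planning illustration, the sketch below derives a few numbers from the figures in this section: the maximum MIG instance count per VM size, an upper bound on memory per instance, and the network bandwidth in GB/s. All function names are hypothetical. Actual MIG profiles come in fixed sizes defined by NVIDIA and do not necessarily divide memory evenly, so the per-instance memory figure is an upper bound only.

```python
MAX_MIG_INSTANCES_PER_GPU = 7  # "up to seven instances" per GPU, from the text
GPU_MEMORY_GB = 94.0           # memory per H100 NVL GPU, from the text
NETWORK_GBPS = 80.0            # "up to 80 Gbps" network bandwidth, from the text


def max_mig_instances(gpu_count: int) -> int:
    """Largest number of MIG instances a VM with gpu_count GPUs could expose."""
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    return gpu_count * MAX_MIG_INSTANCES_PER_GPU


def memory_upper_bound_gb(instances_per_gpu: int) -> float:
    """Upper bound on memory per instance, assuming an even split (an assumption)."""
    if not 1 <= instances_per_gpu <= MAX_MIG_INSTANCES_PER_GPU:
        raise ValueError("instances_per_gpu must be between 1 and 7")
    return GPU_MEMORY_GB / instances_per_gpu


def gbps_to_gb_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


if __name__ == "__main__":
    for gpus in (1, 2):  # the two VM classes described above
        print(f"{gpus} GPU(s): up to {max_mig_instances(gpus)} MIG instances")
    print(f"Memory per instance at 7-way split: <= {memory_upper_bound_gb(7):.1f} GB")
    print(f"Network: {gbps_to_gb_per_s(NETWORK_GBPS):.0f} GB/s")
```

A two-GPU VM could therefore expose up to 14 isolated GPU instances, which is useful when many small inference workloads share one node.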

These VMs are ideal for training models, running inferencing tasks, and developing cutting-edge applications. Learn more about the Azure NC H100 v5-series.

“Snorkel AI is proud to partner with Microsoft to help organizations rapidly and cost-effectively harness the power of data and AI. Azure AI infrastructure delivers the performance our most demanding ML workloads require plus simplified deployment and streamlined management features our researchers love. With the new Azure NC H100 v5 VM series powered by NVIDIA H100 NVL GPUs, we are excited to continue to accelerate iterative data development for enterprises and OSS users alike.”

Paroma Varma, Co-Founder and Head of Research, Snorkel AI

Microsoft and NVIDIA deliver breakthroughs for healthcare and life sciences

Microsoft is expanding its collaboration with NVIDIA to help transform the healthcare and life sciences industry through the integration of cloud, AI, and supercomputing.

By using the global scale, security, and advanced computing capabilities of Azure and Azure AI, along with NVIDIA’s DGX Cloud and NVIDIA Clara suite, healthcare providers, pharmaceutical and biotechnology companies, and medical device developers can now rapidly accelerate innovation across the entire clinical research to care delivery value chain for the benefit of patients worldwide. Learn more.

New Omniverse APIs enable customers across industries to embed massive graphics and visualization capabilities

Today, NVIDIA’s Omniverse platform for developing 3D applications will be available as a set of APIs running on Microsoft Azure, enabling customers to embed advanced graphics and visualization capabilities into existing software applications from Microsoft and partner ISVs.

Built on OpenUSD, a universal data interchange, NVIDIA Omniverse Cloud APIs on Azure do the integration work for customers, giving them seamless physically based rendering capabilities on the front end. Demonstrating the value of these APIs, Microsoft and NVIDIA have been working with Rockwell Automation and Hexagon to show how the physical and digital worlds can be combined for increased productivity and efficiency. Learn more.

Microsoft and NVIDIA envision deeper integration of NVIDIA DGX Cloud with Microsoft Fabric

The two companies are also collaborating to bring NVIDIA DGX Cloud compute and Microsoft Fabric together to power customers’ most demanding data workloads. This means that NVIDIA’s workload-specific optimized runtimes, LLMs, and machine learning will work seamlessly with Fabric.

NVIDIA DGX Cloud and Fabric integration includes extending the capabilities of Fabric by bringing in NVIDIA DGX Cloud’s large language model customization to address data-intensive use cases like digital twins and weather forecasting, with Fabric OneLake as the underlying data storage. The integration will also provide DGX Cloud as an option for customers to accelerate their Fabric data science and data engineering workloads.

Accelerating innovation in the era of AI

For years, Microsoft and NVIDIA have collaborated from hardware to systems to VMs to build new and innovative AI-enabled solutions to address complex challenges in the cloud. Microsoft will continue to expand and enhance its global infrastructure with the most cutting-edge technology in every layer of the stack, delivering improved performance and scalability for cloud and AI workloads and empowering customers to achieve more across industries and domains.

Join Microsoft at NVIDIA GTC AI Conference, March 18 through 21, at booth #1108 and attend a session to learn more about solutions on Azure and NVIDIA.

Learn more about Microsoft AI solutions

The post Microsoft and NVIDIA partnership continues to deliver on the promise of AI appeared first on Microsoft Azure Blog.
