As developers continue to build and deploy AI applications at scale across organizations, Azure is committed to delivering unprecedented choice in models as well as a flexible and comprehensive toolchain to handle the unique, complex and diverse needs of modern enterprises. This powerful combination of the latest models and cutting-edge tooling empowers developers to create highly customized solutions grounded in their organization’s data. That’s why we are excited to announce several updates to help developers quickly create AI solutions with greater choice and flexibility leveraging the Azure AI toolchain:

- Improvements to the Phi family of models, including a new Mixture of Experts (MoE) model and 20+ languages.

- AI21 Jamba 1.5 Large and Jamba 1.5 on Azure AI models as a service.

- Integrated vectorization in Azure AI Search to create a streamlined retrieval augmented generation (RAG) pipeline with integrated data prep and embedding.

- Custom generative extraction models in Azure AI Document Intelligence, so you can now extract custom fields from unstructured documents with high accuracy.

- The general availability of Text to Speech (TTS) Avatar, a capability of Azure AI Speech service, which brings natural-sounding voices and photorealistic avatars to life, across diverse languages and voices, enhancing customer engagement and overall experience.

- The general availability of the VS Code extension for Azure Machine Learning.

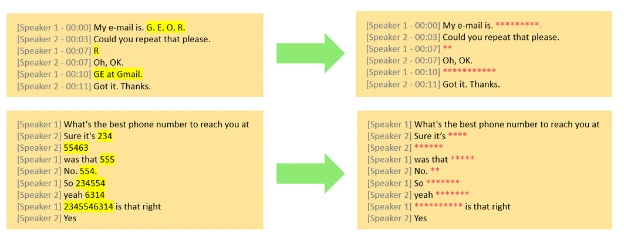

- The general availability of Conversational PII Detection Service in Azure AI Language.

Use the Phi model family with more languages and higher throughput

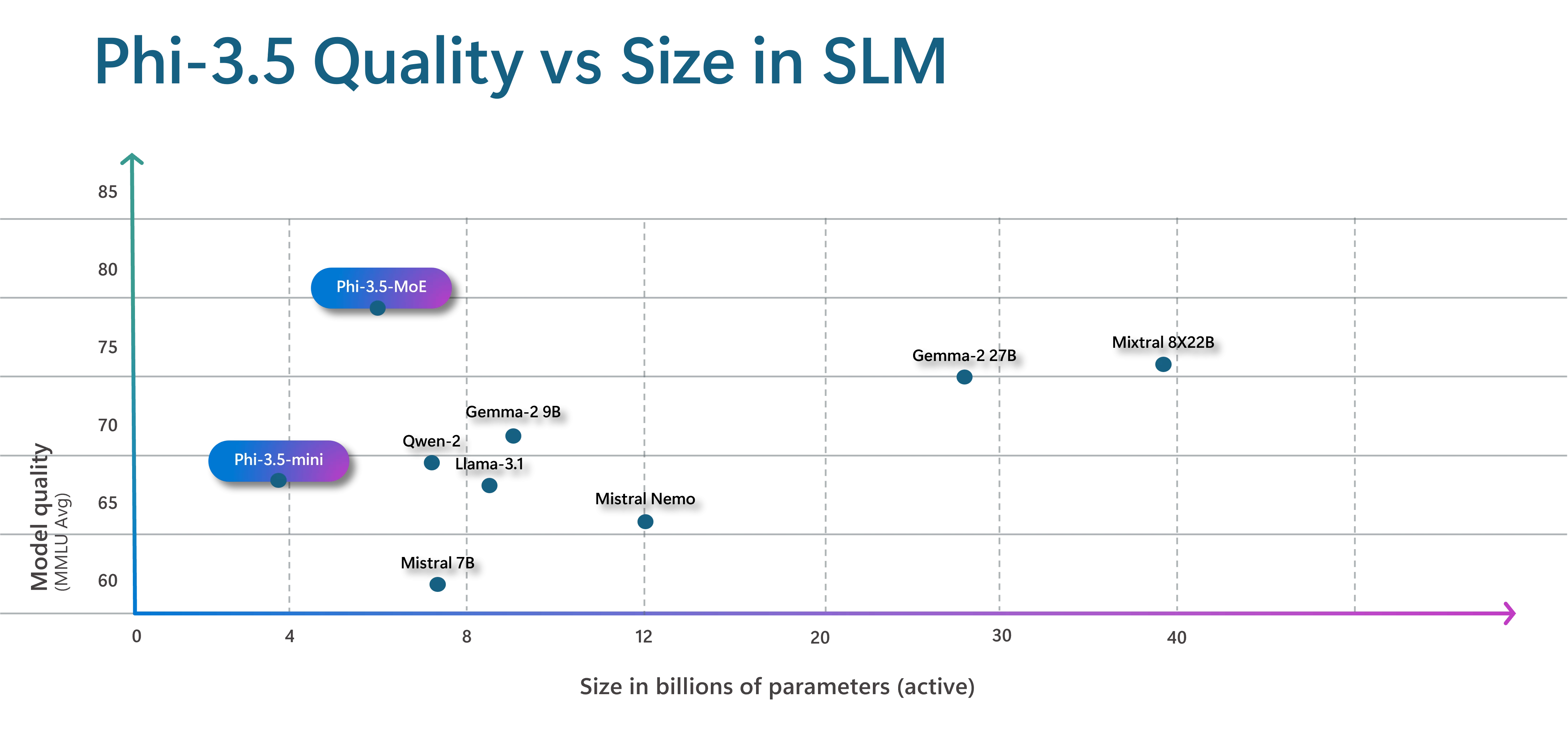

We are introducing a new model to the Phi family, Phi-3.5-MoE, a Mixture of Experts (MoE) model. This new model combines 16 smaller experts into one, which delivers improvements in model quality and lower latency. Although the model has 42B parameters in total, as an MoE model it uses only 6.6B active parameters at a time: during training, subsets of the parameters (experts) specialize, and at runtime the model uses only the experts relevant to the task. This approach gives customers the speed and computational efficiency of a small model with the domain knowledge and higher-quality outputs of a larger model. Read more about how we used a Mixture of Experts architecture to improve Azure AI translation performance and quality.
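To make the routing idea concrete, here is a toy top-k Mixture of Experts layer in plain Python. It is a sketch under stated assumptions, not Phi-3.5-MoE's implementation: the 16 experts match the post, but top-2 routing, the 4-dimensional input and the random linear "experts" are illustrative choices. Only the selected experts are evaluated for a given input, which is why an MoE model's active parameter count (6.6B) is much smaller than its total (42B).

```python
import math
import random

# Toy top-k Mixture of Experts layer (illustrative assumptions, not Phi-3.5-MoE):
# 16 experts as in the post, top-2 routing (assumed), 4-dim inputs, linear "experts".
NUM_EXPERTS = 16
TOP_K = 2
DIM = 4

rng = random.Random(0)  # fixed seed so the example is reproducible
router_w = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
expert_w = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x):
    """Send x to the TOP_K highest-scoring experts and mix their outputs."""
    logits = [dot(w, x) for w in router_w]  # the router scores every expert (cheap)
    top = sorted(range(NUM_EXPERTS), key=lambda i: logits[i], reverse=True)[:TOP_K]
    gates = softmax([logits[i] for i in top])  # renormalize over the selected experts only
    # Only the selected experts run; the other NUM_EXPERTS - TOP_K are skipped entirely.
    y = sum(g * dot(expert_w[i], x) for g, i in zip(gates, top))
    return y, top


y, used = moe_forward([0.5, -1.0, 0.25, 2.0])
print(f"experts used: {used}, output: {y:.3f}")

# Figures from the post: share of parameters active at a time.
active_fraction = 6.6 / 42
print(f"active fraction: {active_fraction:.1%}")
```

The trade-off is that all 42B parameters still have to be held in memory, while per-token compute scales with the roughly 15.7% that are active.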

We are also announcing a new mini model, Phi-3.5-mini. Both the new MoE model and the mini model are multilingual, supporting over 20 languages. The additional languages let people interact with the model in the language they are most comfortable using.

Even with the new languages, Phi-3.5-mini is still a tiny 3.8B parameters.

Companies like CallMiner, a conversational intelligence leader, are selecting and using Phi models for their speed, accuracy, and security.

“CallMiner is constantly innovating and evolving our conversation intelligence platform, and we’re excited about the value Phi models are bringing to our GenAI architecture. As we evaluate different models, we’ve continued to prioritize accuracy, speed, and security... The small size of Phi models makes them incredibly fast, and fine tuning has allowed us to tailor to the specific use cases that matter most to our customers at high accuracy and across multiple languages. Further, the transparent training process for Phi models empowers us to limit bias and implement GenAI securely. We look forward to expanding our application of Phi models across our suite of products.” (Bruce McMahon, CallMiner’s Chief Product Officer)

To make outputs more predictable and define the structure an application needs, we are bringing Guidance to the Phi-3.5-mini serverless endpoint. Guidance is a proven open-source Python library (with 18K+ GitHub stars) that lets developers express, in a single API call, the precise programmatic constraints the model must follow for structured output in JSON, Python, HTML, SQL, or whatever the use case requires. With Guidance, you can eliminate expensive retries and can, for example, constrain the model to select from predefined lists (e.g., medical codes), restrict outputs to direct quotes from the provided context, or follow any regex. Guidance steers the model token by token in the inference stack, producing higher-quality outputs and reducing cost and latency by as much as 30-50% in highly structured scenarios.

- Read more about the benefits of Guidance.

We are also updating the Phi vision model with multi-frame support. This means that Phi-3.5-vision (4.2B parameters) can reason over multiple input images, unlocking new scenarios such as identifying differences between images.

At the core of our product strategy, Microsoft is dedicated to supporting the development of safe and responsible AI, and provides developers with a robust suite of tools and capabilities.

Developers working with Phi models can assess quality and safety with both built-in and custom metrics using Azure AI evaluations, informing necessary mitigations. Azure AI Content Safety provides built-in controls and guardrails, such as prompt shields and protected material detection. These capabilities can be applied across models, including Phi, using content filters, or can be easily integrated into applications through a single API. Once in production, developers can monitor their application for quality and safety, adversarial prompt attacks, and data integrity, making timely interventions with the help of real-time alerts.
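Custom metrics come down to ordinary scoring logic. As a hypothetical example, the function below scores how many words of an answer also appear in the retrieved context, a crude lexical proxy for groundedness. It is not Azure AI's built-in groundedness evaluator, the 0.9 threshold is arbitrary, and how such a function is registered with Azure AI evaluations is not shown here.

```python
import re


def _words(text):
    """Lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def lexical_groundedness(answer, context):
    """Hypothetical custom metric: share of answer words that also occur in the context.
    A crude lexical proxy, not Azure AI's built-in groundedness evaluator."""
    answer_words = _words(answer)
    if not answer_words:
        return 0.0
    context_words = set(_words(context))
    return sum(w in context_words for w in answer_words) / len(answer_words)


def flag_low_scores(rows, threshold=0.9):
    """Score each row and flag answers below the (arbitrary) threshold for review."""
    results = []
    for row in rows:
        score = lexical_groundedness(row["answer"], row["context"])
        results.append({**row, "score": score, "flagged": score < threshold})
    return results


rows = [
    {"context": "Phi-3.5-mini has 3.8B parameters.", "answer": "Phi-3.5-mini has 3.8B parameters."},
    {"context": "Phi-3.5-mini has 3.8B parameters.", "answer": "Phi-3.5-mini has 70B parameters."},
]
for r in flag_low_scores(rows):
    print(f"{r['score']:.2f} flagged={r['flagged']}  {r['answer']}")
```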

Introducing AI21 Jamba 1.5 Large and Jamba 1.5 on Azure AI models as a service

Furthering our goal to provide developers with access to the broadest selection of models, we are also excited to announce two new open models, Jamba 1.5 Large and Jamba 1.5, available in the Azure AI model catalog. These models use the Jamba architecture, blending Mamba and Transformer layers for efficient long-context processing.

According to AI21, the Jamba 1.5 Large and Jamba 1.5 models are the most advanced in the Jamba series. These models use the hybrid Mamba-Transformer architecture, which balances speed, memory, and quality by employing Mamba layers for short-range dependencies and Transformer layers for long-range dependencies. Consequently, this family of models excels at managing extended contexts, making it well suited to industries including financial services, healthcare, and life sciences, as well as retail and CPG.
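The memory side of the hybrid design can be illustrated with a quick calculation. Attention layers keep a key-value (KV) cache that grows with context length, whereas Mamba (state space) layers carry a fixed-size state. The sketch assumes a hypothetical 32-layer stack with one attention layer in every eight and a made-up attention shape (8 KV heads of dimension 128, 16-bit values, a 256K-token context); these numbers are illustrative, not Jamba 1.5's published configuration.

```python
# Assumed, illustrative configuration (not Jamba 1.5's actual one): 32 layers,
# every 8th layer is attention, the rest are Mamba layers with fixed-size state.


def layer_schedule(n_layers, attn_every):
    """Interleave layers so that every `attn_every`-th layer is attention."""
    return ["attention" if (i + 1) % attn_every == 0 else "mamba" for i in range(n_layers)]


def kv_cache_bytes(n_attn_layers, seq_len, n_kv_heads, head_dim, bytes_per_value=2):
    """Keys and values (factor 2) stored for every token in every attention layer."""
    return 2 * n_attn_layers * seq_len * n_kv_heads * head_dim * bytes_per_value


layers = layer_schedule(32, attn_every=8)
n_attn = layers.count("attention")
seq_len = 256_000  # hypothetical long context

hybrid = kv_cache_bytes(n_attn, seq_len, n_kv_heads=8, head_dim=128)
all_attention = kv_cache_bytes(len(layers), seq_len, n_kv_heads=8, head_dim=128)

print(f"{n_attn} attention layers out of {len(layers)}")
print(f"KV cache, hybrid stack:        {hybrid / 2**30:.2f} GiB")
print(f"KV cache, all-attention stack: {all_attention / 2**30:.2f} GiB")
```

Under these assumptions the hybrid stack needs one eighth of the KV cache of an all-attention stack of the same depth, which is one reason this kind of architecture suits long contexts.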

“We are excited to deepen our collaboration with Microsoft, bringing the cutting-edge innovations of the Jamba Model family to Azure AI users… As an advanced hybrid SSM-Transformer (Structured State Space Model-Transformer) set of foundation models, the Jamba model family democratizes access to efficiency, low latency, high quality, and long-context handling. These models empower enterprises with enhanced performance and seamless integration with the Azure AI platform.” (Pankaj Dugar, Senior Vice President and General Manager of North America at AI21)

- Learn more about the Jamba model family.

Simplify RAG for generative AI applications

We are streamlining RAG pipelines with integrated, end-to-end data preparation and embedding. Organizations often use RAG in generative AI applications to incorporate knowledge from private, organization-specific data without having to retrain the model. With RAG, you can use strategies like vector and hybrid retrieval to surface relevant, informed information for a query, grounded in your data. However, vector search requires significant data preparation. Your app must ingest, parse, enrich, embed, and index data of various types, often living in multiple sources, just so that it can be used in your copilot.
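The data preparation and retrieval steps described above can be sketched end to end in plain Python: chunk documents, embed each chunk, index the vectors, then answer a query with hybrid retrieval that fuses a vector ranking and a keyword ranking using Reciprocal Rank Fusion (RRF). Everything here is a stand-in under stated assumptions: `embed` is a toy hashed bag-of-words rather than a real embedding model, the keyword score is plain term overlap rather than BM25, and the chunk sizes, documents and ids are hypothetical.

```python
import hashlib
import math
import re

DIM = 64  # hypothetical embedding size


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text):
    """Toy stand-in for an embedding model: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * DIM
    for tok in tokenize(text):
        vec[int(hashlib.md5(tok.encode()).hexdigest(), 16) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def chunk(text, size=12, overlap=4):
    """Split a document into overlapping windows of words."""
    words = text.split()
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def build_index(docs):
    """Ingest -> chunk -> embed -> index."""
    index = []
    for doc_id, text in docs.items():
        for n, piece in enumerate(chunk(text)):
            index.append({"id": f"{doc_id}#{n}", "text": piece, "vector": embed(piece)})
    return index


def vector_ranking(index, query):
    q = embed(query)  # the query is vectorized with the same model as the documents
    scored = [(sum(a * b for a, b in zip(q, e["vector"])), e["id"]) for e in index]
    return [doc_id for _, doc_id in sorted(scored, reverse=True)]


def keyword_ranking(index, query):
    terms = set(tokenize(query))
    scored = [(len(terms & set(tokenize(e["text"]))), e["id"]) for e in index]
    return [doc_id for _, doc_id in sorted(scored, reverse=True)]


def rrf(rankings, k=60):
    """Reciprocal Rank Fusion: each ranking contributes 1 / (k + rank) per document."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


def hybrid_search(index, query, top=3):
    return rrf([vector_ranking(index, query), keyword_ranking(index, query)])[:top]


DOCS = {
    "phi": "Phi-3.5-mini is a small language model with 3.8B parameters",
    "jamba": "Jamba blends Mamba and Transformer layers for long context",
    "search": "Azure AI Search supports vector and hybrid retrieval",
}
index = build_index(DOCS)
print(hybrid_search(index, "hybrid retrieval in Azure AI Search"))
```

RRF only needs ranks, not comparable scores, which is why it is a common way to merge a similarity ranking with a keyword ranking.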

Today we are announcing the general availability of integrated vectorization in Azure AI Search. Integrated vectorization automates and streamlines these processes in a single flow. With automatic vector indexing and querying through integrated access to embedding models, your application can make full use of your data.

In addition to improving developer productivity, integrated vectorization enables organizations to offer turnkey RAG systems as solutions for new projects, so teams can quickly build an application specific to their datasets and needs without having to build a custom deployment each time.

Customers like SGS & Co, a global brand impact group, are streamlining their workflows with integrated vectorization.

“SGS AI Visual Search is a GenAI application built on Azure for our global production teams to more effectively find sourcing and research information pertinent to their project… The most significant advantage offered by SGS AI Visual Search is utilizing RAG, with Azure AI Search as the retrieval system, to accurately locate and retrieve relevant assets for project planning and production.” (Laura Portelli, Product Manager, SGS & Co)

- Learn more about vectorization.

Extract custom fields in Document Intelligence

You can now extract custom fields from unstructured documents with high accuracy by building and training a custom generative model within Document Intelligence. This new capability uses generative AI to extract user-specified fields from documents across a wide variety of visual templates and document types. You can get started with as few as five training documents. While you build a custom generative model, automatic labeling saves time and effort on manual annotation, results are shown as grounded where applicable, and confidence scores are available so you can quickly filter high-quality extracted data for downstream processing and reduce manual review time.

- Learn more about the custom field extraction preview.
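Confidence scores make a simple triage step possible: accept high-confidence fields automatically and send the rest to a person. The sketch assumes a hypothetical result shape (field name mapped to a value and a confidence), hypothetical field names, and an arbitrary 0.85 threshold; the actual Document Intelligence response has its own schema.

```python
# Hypothetical extraction result: field name -> (value, confidence).
# The real Document Intelligence response has its own schema; only the routing logic is shown.


def route_fields(fields, threshold=0.85):
    """Split fields into auto-accepted and needs-review buckets by confidence."""
    accepted, needs_review = {}, {}
    for name, (value, confidence) in fields.items():
        target = accepted if confidence >= threshold else needs_review
        target[name] = value
    return accepted, needs_review


extracted = {
    "policy_number": ("PN-20931", 0.97),
    "claim_amount": ("1,250.00", 0.91),
    "incident_date": ("2024-07-14", 0.62),
}
auto, review = route_fields(extracted)
print("auto-accepted:", auto)
print("send to manual review:", review)
```

Raising the threshold trades more manual review for fewer unchecked errors downstream; the right value depends on the cost of a wrong field in your process.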

Create engaging experiences with prebuilt and customized avatars

Today we are excited to announce that Text to Speech (TTS) Avatar, a capability of Azure AI Speech service, is now generally available. This service brings natural-sounding voices and photorealistic avatars to life, across diverse languages and voices, enhancing customer engagement and overall experience. With TTS Avatar, developers can create personalized and engaging experiences for their customers and employees, while also improving efficiency and providing innovative solutions.

The TTS Avatar service provides developers with a variety of prebuilt avatars featuring a diverse portfolio of natural-sounding voices, as well as an option to create custom synthetic voices using Azure Custom Neural Voice. Additionally, the photorealistic avatars can be customized to match a company’s branding. For example, Fujifilm is using TTS Avatar with NURA, the world’s first AI-powered health screening center.

“Embracing the Azure TTS Avatar at NURA as our 24-hour AI assistant marks a pivotal step in healthcare innovation. At NURA, we envision a future where AI-powered assistants redefine customer interactions, brand management, and healthcare delivery. Working with Microsoft, we’re honored to pioneer the next generation of digital experiences, revolutionizing how businesses connect with customers and elevate brand experiences, paving the way for a new era of personalized care and engagement. Let’s bring more smiles together.” (Dr. Kasim, Executive Director and Chief Operating Officer, Nura AI Health Screening)

As we bring this technology to market, ensuring responsible use and development of AI remains our top priority. Custom Text to Speech Avatar is a limited access service in which we have integrated safety and security features. For example, the system embeds invisible watermarks in avatar outputs. These watermarks allow approved users to verify whether a video was created using Azure AI Speech’s avatar feature. Additionally, we provide guidelines for TTS Avatar’s responsible use, including measures to promote transparency in user interactions, to identify and mitigate potential bias or harmful synthetic content, and to integrate with Azure AI Content Safety. In this transparency note, we describe the technology and capabilities of TTS Avatar, its approved use cases, considerations when choosing use cases, its limitations, fairness considerations, and best practices for improving system performance. We also require all developers and content creators to apply for access and comply with our code of conduct when using TTS Avatar features, including prebuilt and custom avatars.

- Learn more about Azure TTS Avatar.

Use Azure Machine Learning resources in VS Code

We’re thrilled to announce the general availability of the VS Code extension for Azure Machine Learning. The extension allows you to build, train, deploy, debug, and manage machine learning models with Azure Machine Learning directly from your favorite VS Code setup, whether on desktop or web. With features like VNET support, IntelliSense, and integration with the Azure Machine Learning CLI, the extension is now ready for production use. Read this tech community blog to learn more about the extension.

Customers like Fashable have put this into production.

“We have been using the VS Code extension for Azure Machine Learning since its preview release, and it has significantly streamlined our workflow… The ability to manage everything from building to deploying models directly within our preferred VS Code environment has been a game-changer. The seamless integration and robust features like interactive debugging and VNET support have enhanced our productivity and collaboration. We are thrilled about its general availability and look forward to leveraging its full potential in our AI projects.” (Ornaldo Ribas Fernandes, Co-founder and CEO, Fashable)

Protect users’ privacy

Today we are excited to announce the general availability of Conversational PII Detection Service in Azure AI Language, enhancing Azure AI’s ability to identify and redact sensitive information in conversations, starting with English. This service aims to improve data privacy and security for developers building generative AI apps for their enterprise. The Conversational PII redaction service expands upon the Text PII redaction service, which supports customers looking to identify, categorize, and redact sensitive information such as phone numbers and email addresses in unstructured text. The Conversational PII model is specialized for conversational inputs, particularly those found in speech transcriptions from meetings and calls.

- Learn more about the Conversational PII redaction service.
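To show what identifying, categorizing and redacting means on conversational input, here is a deliberately simple regex-based sketch over a list of conversation turns. It is not how the Conversational PII service works (the service is model-based and covers many more categories and formats); the two patterns, the category labels, the turn structure and the sample data are assumptions for illustration.

```python
import re

# Toy patterns and labels (hypothetical); real PII detection is model-based and far broader.
PATTERNS = {
    "Email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "PhoneNumber": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact_turn(text):
    """Return (redacted_text, [(category, original_text), ...]) for one turn."""
    entities = []
    for category, pattern in PATTERNS.items():
        entities += [(category, m.group()) for m in pattern.finditer(text)]
        text = pattern.sub(f"[{category}]", text)  # replace each match with its category label
    return text, entities


def redact_conversation(turns):
    """Redact every turn, keeping the speaker metadata unchanged."""
    redacted, found = [], []
    for turn in turns:
        text, entities = redact_turn(turn["text"])
        redacted.append({**turn, "text": text})
        found.extend(entities)
    return redacted, found


conversation = [
    {"participant": "agent", "text": "Can I get a callback number?"},
    {"participant": "customer", "text": "Sure, it's 425-555-0100, or email jane.doe@contoso.com."},
]
redacted, found = redact_conversation(conversation)
for turn in redacted:
    print(f"{turn['participant']}: {turn['text']}")
print(found)
```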

Self-serve your Azure OpenAI Service PTUs

We recently announced updates to Azure OpenAI Service, including the ability to manage your Azure OpenAI Service quota deployments without relying on support from your account team, allowing you to request Provisioned Throughput Units (PTUs) more flexibly and efficiently. We also released OpenAI’s latest model when they made it available on 8/7, which introduced Structured Outputs, such as JSON Schemas, for the new GPT-4o and GPT-4o mini models. Structured Outputs are particularly valuable for developers who need to validate and format AI outputs into structures like JSON Schemas.

- Read more about Structured Outputs for GPT-4o and GPT-4o mini models on the Azure Blog.
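A request that uses Structured Outputs passes a JSON Schema in `response_format`. The `json_schema` type with `strict: true` follows OpenAI's Structured Outputs documentation; the invoice schema and prompt are hypothetical, and the HTTP call itself (endpoint, deployment, API version) is omitted. The small `conforms` helper checks a reply against only the subset of JSON Schema used here; it is not a full validator.

```python
import json

# Hypothetical schema; strict mode expects every property listed in "required"
# and "additionalProperties": false.
invoice_schema = {
    "type": "object",
    "properties": {
        "vendor": {"type": "string"},
        "total": {"type": "number"},
        "currency": {"type": "string", "enum": ["USD", "EUR"]},
    },
    "required": ["vendor", "total", "currency"],
    "additionalProperties": False,
}

request_body = {
    "messages": [
        {"role": "system", "content": "Extract the invoice fields."},
        {"role": "user", "content": "Contoso billed us 120.50 USD."},
    ],
    "response_format": {
        "type": "json_schema",
        "json_schema": {"name": "invoice", "strict": True, "schema": invoice_schema},
    },
}

PY_TYPES = {"string": str, "number": (int, float), "object": dict}


def conforms(value, schema):
    """Check value against the small JSON Schema subset used above."""
    if not isinstance(value, PY_TYPES[schema["type"]]) or isinstance(value, bool):
        return False
    if "enum" in schema and value not in schema["enum"]:
        return False
    if schema["type"] == "object":
        props = schema.get("properties", {})
        if any(key not in value for key in schema.get("required", [])):
            return False
        if schema.get("additionalProperties") is False and set(value) - set(props):
            return False
        return all(conforms(value[k], props[k]) for k in value if k in props)
    return True


reply = json.loads('{"vendor": "Contoso", "total": 120.5, "currency": "USD"}')
print(json.dumps(request_body["response_format"], indent=2))
print("reply conforms:", conforms(reply, invoice_schema))
```

With strict Structured Outputs the service is meant to return schema-conforming JSON, so a client-side check like this acts as a cheap safety net rather than a retry loop.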

We continue to invest across the Azure AI stack to bring state-of-the-art innovation to our customers so you can build, deploy, and scale your AI solutions safely and confidently. We can’t wait to see what you build next.

Stay up to date with more Azure AI news

- See the latest Azure OpenAI Service news.

- See the latest Azure AI news.

- Read more in our Azure AI services documentation.

- Read the latest AI and machine learning blogs.

The post Boost your AI with Azure’s new Phi model, streamlined RAG, and custom generative AI models appeared first on Microsoft Azure Blog.
