Over the last 10 years, the top jobs in data analysis have evolved from statistics and applied modeling to actuarial science, then data science, then machine learning, and now, here we are: Artificial Intelligence and Generative AI. AI has become ubiquitous; most people have used it, and almost everyone has an opinion about it. As an engineer, I’m excited to turn all of this innovation into practical applications and, ultimately, to ensure it operates safely and securely.

Before I jump into this multi-part series on securing generative AI, I want to take some time to give an overview of where we are today and explain some core components and complexities.

Generative AI is a broad term that can describe any AI system that generates content. When we start to think about securing Generative AI, there are a few key concepts to understand.

1. Generative AI can be a single model (such as a large language model) or consist of multiple models combined in various configurations.

2. It can be single-modal (i.e., text only) or multimodal (e.g., text, speech, and images), which affects what kinds of data the models are trained on.

3. Data inputs into models can vary. Often we are talking about some form of mass data ingestion augmented with custom data. This data can be structured and labeled in advance, or left unlabeled, with the model deriving its own labels from patterns in the data. When you run a model, the input is analyzed and fed through it, and in a matter of seconds all of these factors coalesce into an output. For example, an enterprise can deploy “generative AI” to help with customer service using a “large language model” trained on “text and voice data from its previous customer service representatives,” using a supervised method in which customers rated each of those previous interactions.
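To make the supervised part of that customer-service example concrete, here is a minimal sketch of turning past interactions and their customer ratings into labeled training examples. The `Interaction` schema, the 1–5 rating scale, and the `min_rating` threshold are all hypothetical assumptions for illustration, not a description of any particular product.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    """One past customer-service exchange (hypothetical schema)."""
    transcript: str
    rating: int  # customer feedback, assumed to be on a 1-5 scale


def build_labeled_examples(interactions, min_rating=4):
    """Pair each transcript with a label derived from customer feedback.

    Interactions rated at or above min_rating are labeled "good"; the rest
    are labeled "poor". A supervised training job could then learn to
    imitate the "good" responses.
    """
    examples = []
    for item in interactions:
        label = "good" if item.rating >= min_rating else "poor"
        examples.append((item.transcript, label))
    return examples


history = [
    Interaction("Customer: My order is late. Agent: I've expedited it for you.", 5),
    Interaction("Customer: I need a refund. Agent: Please call back later.", 2),
]
print(build_labeled_examples(history))
```

The threshold is the design lever here: where you draw the line between "good" and "poor" directly shapes what behavior the model is trained to reproduce.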

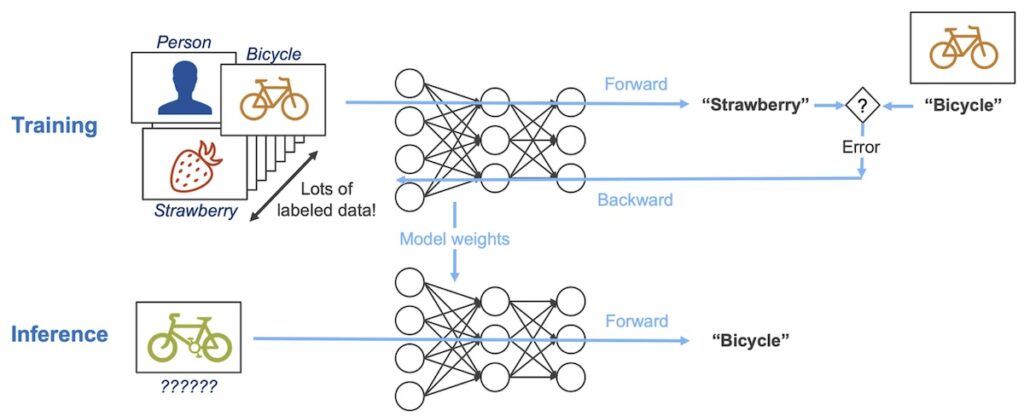

In addition to how Generative AI is deployed, we should also consider the two foundational processes behind the models described above: training and inference.

Training is the process through which a model learns patterns and the relationships between many objects, such as which words appear together and how often.
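As a toy illustration of "which words appear together and how often," the sketch below trains a tiny bigram model by counting which word follows which. Real language models learn far richer representations; this is only an assumption-laden simplification, and the corpus is made up.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count how often each word is immediately followed by another word.

    The nested counts are the toy model's entire "knowledge": a record of
    which words tend to go together in the training text.
    """
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


corpus = [
    "the order shipped today",
    "the order is delayed",
    "the refund shipped today",
]
model = train_bigrams(corpus)
print(model["the"].most_common())  # [('order', 2), ('refund', 1)]
print(model["shipped"]["today"])   # 2
```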


Inference is the process of using a trained model to generate some form of output. Mark Robins created a great example that boils down the different elements of model development and usage. Take a look at the simple example below.
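Separately from that example, the inference step itself can be sketched in a few lines. This assumes a hypothetical table of bigram counts that a training step has already produced, and it uses greedy decoding (always pick the most frequent next word), which is just one simple way to generate output.

```python
# Bigram counts a (hypothetical) training step has already produced:
# for each word, how often each next word followed it in the training text.
TRAINED_COUNTS = {
    "the": {"order": 2, "refund": 1},
    "order": {"shipped": 2, "is": 1},
    "shipped": {"today": 2},
}


def generate(start, max_words=5):
    """Inference: repeatedly pick the most frequent next word (greedy decoding)."""
    words = [start]
    while len(words) < max_words:
        options = TRAINED_COUNTS.get(words[-1])
        if not options:  # no known continuation, so stop
            break
        words.append(max(options, key=options.get))
    return " ".join(words)


print(generate("the"))  # the order shipped today
```

Note that training happens once and is expensive, while inference like this runs every time a user sends a request, which is why the two raise different security questions.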

Now that we have an idea of what the key components of Generative AI are, let’s talk about securing it. Like any other type of software, Generative AI can be deployed in the cloud, hosted on your own infrastructure, or consumed from a third party. Self-hosted systems often need additional high-performance clusters to keep computation times short and responses fast for users. When deploying this type of system, we encounter many of the same challenges typical at large enterprises, such as supply chain security, static analysis, and data security. Similarly, we need to evaluate where the data is processed, especially if processing is done by a third-party provider or potentially in other countries.
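The "where is the data processed" question can be turned into an explicit check before a deployment goes live. The sketch below is a minimal, hypothetical policy gate: the provider names, region codes, and the `Deployment` type are placeholders chosen for illustration, not any vendor's configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deployment:
    provider: str  # who runs inference, e.g. "self-hosted" or a vendor name
    region: str    # where prompts and outputs are processed


# Hypothetical policy; the provider names and region codes are placeholders.
APPROVED_PROVIDERS = {"self-hosted", "vendor-a"}
ALLOWED_REGIONS = {"us-east", "us-west"}


def policy_violations(deployment):
    """Return the reasons a deployment breaks the data-handling policy (empty if none)."""
    problems = []
    if deployment.provider not in APPROVED_PROVIDERS:
        problems.append(f"unapproved third-party provider: {deployment.provider}")
    if deployment.region not in ALLOWED_REGIONS:
        problems.append(f"data processed outside allowed regions: {deployment.region}")
    return problems


print(policy_violations(Deployment("vendor-b", "ap-south")))
```

Returning a list of reasons rather than a single boolean makes it easier to report every problem at once during a review.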

Here’s an example from Meta’s open-source Llama system that shows how Llama Guard can be paired with Llama to provide additional safety and security within the processing steps described above.

Meta’s open source Llama system
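The general flow of pairing a safety classifier with a model can be sketched as follows. This is a minimal illustration under stated assumptions, not Meta’s API: `classify_safety` is a hypothetical keyword check standing in for a classifier such as Llama Guard, and `generate_reply` stands in for the main model. What matters is where the checks sit: one on the user’s input and one on the model’s output.

```python
from typing import Callable

# Hypothetical stand-ins: a real deployment would call a safety classifier
# (the role Llama Guard plays) and an LLM here. Neither function below is
# Meta's API; they only illustrate where each check sits in the flow.
BLOCKED_TERMS = {"credit card dump", "build a weapon"}


def classify_safety(text: str) -> bool:
    """Return True if the text looks safe (toy keyword check, not a real classifier)."""
    lowered = text.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


def guarded_chat(prompt: str, generate_reply: Callable[[str], str]) -> str:
    # 1. Screen the user's input before it reaches the model.
    if not classify_safety(prompt):
        return "Request declined by input safety check."
    # 2. Run the main model.
    reply = generate_reply(prompt)
    # 3. Screen the model's output before it reaches the user.
    if not classify_safety(reply):
        return "Response withheld by output safety check."
    return reply


def echo_model(prompt: str) -> str:
    """Placeholder 'model' that just echoes the prompt."""
    return f"You asked: {prompt}"


print(guarded_chat("Where is my order?", echo_model))
```

Checking the output as well as the input matters because a harmless-looking prompt can still lead the model to produce something unsafe.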

When you look closer, you start to see how Generative AI differs: it brings a whole class of new safety and security challenges that haven’t really existed at mass adoption before. Generative AI can use multimodal inputs, so beyond language processing, we add code, pictures, audio, and video to the mix, which makes processing more expensive and data harder to contain. We also have to deal with probabilistic rather than deterministic outputs, which makes repeatability difficult: the same input can produce different outputs from run to run, so uncovering hidden vulnerabilities takes far more testing and processing power. There are also a variety of other issues with hallucinations, storing memories, understanding logic, building code, and other high-stakes areas, which together could approach an effectively infinite number of test cases to build.
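The difference between deterministic and probabilistic outputs is easy to see in miniature. The sketch below assumes a hypothetical set of next-token scores (logits) and compares greedy selection, which always returns the same token, with temperature sampling, which can return different tokens for the same input. The token names and scores are invented for illustration.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw model scores into probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical next-token scores a model might assign after some prompt.
TOKENS = ["refund", "replacement", "discount"]
LOGITS = [2.0, 1.5, 0.5]


def greedy(logits):
    """Deterministic: always choose the highest-scoring token."""
    return TOKENS[max(range(len(logits)), key=lambda i: logits[i])]


def sample(logits, rng, temperature=1.0):
    """Probabilistic: draw a token in proportion to its probability."""
    return rng.choices(TOKENS, weights=softmax(logits, temperature), k=1)[0]


rng = random.Random()  # unseeded: results vary from run to run
print([greedy(LOGITS) for _ in range(5)])       # ['refund', 'refund', 'refund', 'refund', 'refund']
print([sample(LOGITS, rng) for _ in range(5)])  # a mix that can change on every run

# Fixing the seed restores repeatability, which helps when reproducing a finding.
print(sample(LOGITS, random.Random(42)) == sample(LOGITS, random.Random(42)))  # True
```

For security testing, this is why a single passing run proves little: an unsafe completion may only appear on some fraction of sampled runs, so testers often record seeds and sampling settings to reproduce what they find.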

While many security challenges parallel traditional security, we also have to understand that this new, complex system requires new approaches to security. Can we use Generative AI to secure Generative AI? In my forthcoming series of articles, I will dive deeper into safety, security, red teaming and more. Roads? Where we’re going, we don’t need roads.

This column is Part 1 of a multi-part series on securing generative AI:

Part 1: Back to the Future, Securing Generative AI

Part 2: Trolley problem, Safety Versus Security of Generative AI (Stay Tuned)

Part 3: Build vs Buy, Red Teaming AI (Stay Tuned)

Part 4: Timeless Compliance (Stay Tuned)
