As the pace of AI and the transformation it enables across industries continues to accelerate, Microsoft is committed to building and enhancing our global cloud infrastructure to meet the needs of customers and developers with faster, more performant, and more efficient compute and AI solutions. Azure AI infrastructure comprises technology from industry leaders as well as Microsoft’s own innovations, including Azure Maia 100, Microsoft’s first in-house AI accelerator, announced in November. In this blog, we will dive deeper into the technology and journey of developing Azure Maia 100, the co-design of hardware and software from the ground up, built to run cloud-based AI workloads and optimized for Azure AI infrastructure.

Azure Maia 100, pushing the boundaries of semiconductor innovation

Maia 100 was designed to run cloud-based AI workloads, and the design of the chip was informed by Microsoft’s experience in running complex and large-scale AI workloads such as Microsoft Copilot. Maia 100 is one of the largest processors made on a 5nm node using advanced packaging technology from TSMC.

Through collaboration with Azure customers and leaders in the semiconductor ecosystem, such as foundry and EDA partners, we will continue to apply real-world workload requirements to our silicon design, optimizing the entire stack from silicon to service, and delivering the best technology to our customers to empower them to achieve more.

End-to-end systems optimization, designed for scalability and sustainability

When developing the architecture for the Azure Maia AI accelerator series, Microsoft reimagined the end-to-end stack so that our systems could handle frontier models more efficiently and in less time. AI workloads demand infrastructure that is dramatically different from other cloud compute workloads, requiring increased power, cooling, and networking capability. Maia 100’s custom rack-level power distribution and management integrates with Azure infrastructure to achieve dynamic power optimization. Maia 100 servers are designed with a fully custom, Ethernet-based network protocol with aggregate bandwidth of 4.8 terabits per accelerator to enable better scaling and end-to-end workload performance.
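To put the 4.8 terabit figure in more familiar units, here is a small back-of-the-envelope sketch. It assumes the figure is a per-second rate and uses decimal (SI) prefixes. The function names and the 10 GB payload are illustrative only, and the transfer time is an idealized lower bound that ignores protocol overhead and contention.

```python
BITS_PER_BYTE = 8
MAIA_100_TBPS = 4.8  # aggregate bandwidth per accelerator quoted above (assumed to be per second)


def tbps_to_gigabytes_per_second(terabits_per_second: float) -> float:
    """Convert terabits/s to gigabytes/s using decimal (SI) prefixes."""
    gigabits_per_second = terabits_per_second * 1000
    return gigabits_per_second / BITS_PER_BYTE


def ideal_transfer_seconds(payload_gigabytes: float, terabits_per_second: float) -> float:
    """Lower bound on transfer time: ignores protocol overhead and contention."""
    return payload_gigabytes / tbps_to_gigabytes_per_second(terabits_per_second)


if __name__ == "__main__":
    rate = tbps_to_gigabytes_per_second(MAIA_100_TBPS)
    print(f"{MAIA_100_TBPS} Tb/s is roughly {rate:.0f} GB/s")
    # Hypothetical 10 GB payload, chosen only for illustration.
    millis = ideal_transfer_seconds(10, MAIA_100_TBPS) * 1000
    print(f"10 GB at peak rate: about {millis:.1f} ms")
```

Real-world throughput depends on topology, message sizes, and the collective operations in use, so treat these numbers as an upper bound on what the link can deliver.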

When we developed Maia 100, we also built a dedicated “sidekick” to match the thermal profile of the chip and added rack-level, closed-loop liquid cooling to Maia 100 accelerators and their host CPUs to achieve higher efficiency. This architecture allows us to bring Maia 100 systems into our existing datacenter infrastructure, and to fit more servers into these facilities, all within our existing footprint. The Maia 100 sidekicks are also built and manufactured to meet our zero waste commitment.

Co-optimizing hardware and software from the ground up with the open-source ecosystem

From the start, transparency and collaborative advancement have been core tenets in our design philosophy as we build and develop Microsoft’s cloud infrastructure for compute and AI. Collaboration enables faster iterative development across the industry, and on the Maia 100 platform, we’ve cultivated an open community mindset from algorithmic data types to software to hardware.

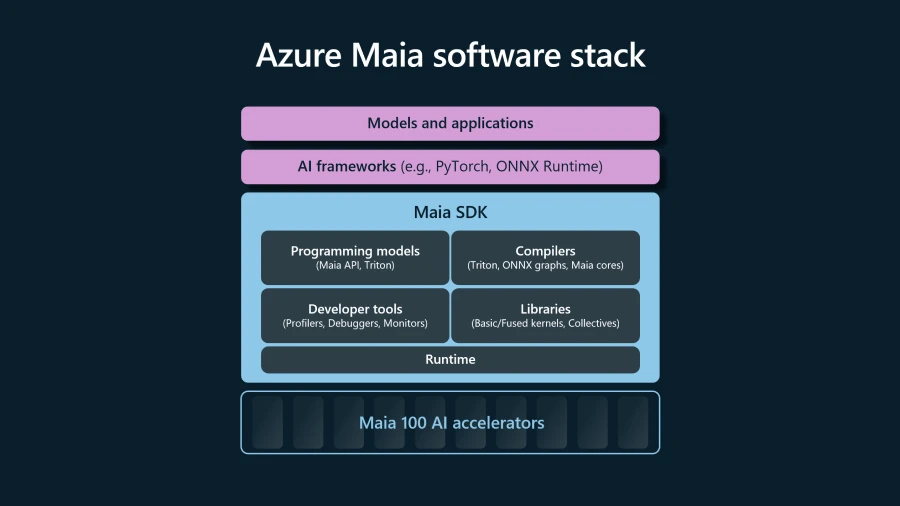

To make it easy to develop AI models on Azure AI infrastructure, Microsoft is creating the software for Maia 100 that integrates with popular open-source frameworks like PyTorch and ONNX Runtime. The software stack provides rich and comprehensive libraries, compilers, and tools to equip data scientists and developers to successfully run their models on Maia 100.

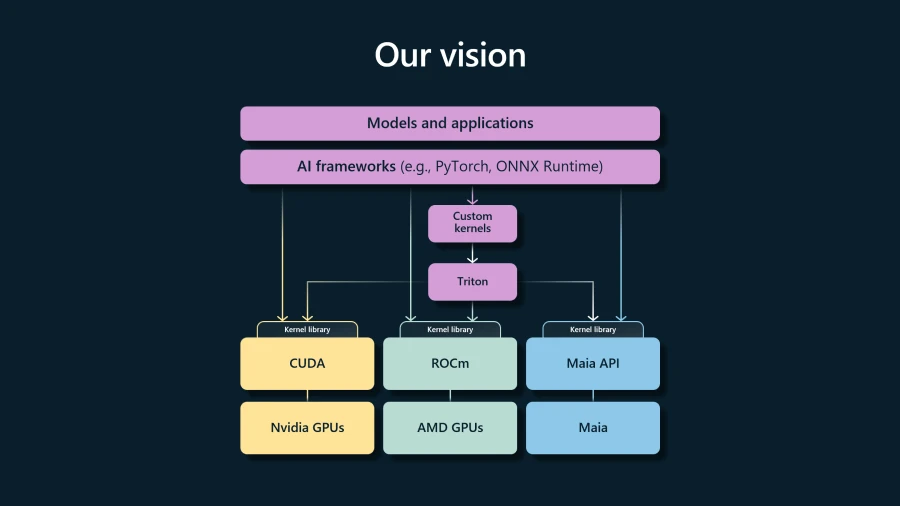

To optimize workload performance, AI hardware typically requires development of custom kernels that are silicon-specific. We envision seamless interoperability among AI accelerators in Azure, so we have integrated Triton from OpenAI. Triton is an open-source programming language that simplifies kernel authoring by abstracting the underlying hardware. This will empower developers with complete portability and flexibility without sacrificing efficiency and the ability to target AI workloads.

Maia 100 is also the first implementation of the Microscaling (MX) data format, an industry-standardized data format that leads to faster model training and inferencing times. Microsoft has partnered with AMD, ARM, Intel, Meta, NVIDIA, and Qualcomm to release the v1.0 MX specification through the Open Compute Project community so that the entire AI ecosystem can benefit from these algorithmic improvements.
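For readers unfamiliar with block-scaled formats, the following is a minimal sketch of the general idea behind MX: a small block of values shares one power-of-two scale, and each element is stored in a narrow type. The block size of 32 and the power-of-two shared scale reflect the published v1.0 specification as commonly summarized. The signed 8-bit integer elements, the exponent selection rule, and all function names are simplifying assumptions for illustration, not the bit-exact encoding and not Maia 100’s implementation.

```python
import math

BLOCK_SIZE = 32  # elements that share one scale (assumed from the MX v1.0 spec)
ELEM_MAX = 127   # assumption: symmetric signed 8-bit integer elements


def quantize_block(values):
    """Return (shared_exponent, int_elements) for one block of floats."""
    max_abs = max((abs(v) for v in values), default=0.0)
    if max_abs == 0.0:
        return 0, [0] * len(values)
    # Smallest power-of-two scale for which the largest value still fits.
    exponent = math.ceil(math.log2(max_abs / ELEM_MAX))
    scale = 2.0 ** exponent
    # Clamp defensively in case of floating-point edge cases.
    elements = [max(-ELEM_MAX, min(ELEM_MAX, round(v / scale))) for v in values]
    return exponent, elements


def dequantize_block(exponent, elements):
    """Reconstruct approximate floats from a shared exponent and int elements."""
    scale = 2.0 ** exponent
    return [e * scale for e in elements]


def quantize(values, block_size=BLOCK_SIZE):
    """Split a flat list into blocks and quantize each block independently."""
    return [
        quantize_block(values[i:i + block_size])
        for i in range(0, len(values), block_size)
    ]


if __name__ == "__main__":
    sample = [1.0, -0.5, 0.25, 0.0]
    exponent, elements = quantize_block(sample)
    print("shared exponent:", exponent)
    print("elements:", elements)
    print("reconstructed:", dequantize_block(exponent, elements))
```

Because each block carries its own scale, one outlier only reduces precision for the other values in its block rather than for the whole tensor, which is the main appeal of this family of formats.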

Azure Maia 100 is a unique innovation combining state-of-the-art silicon packaging techniques, ultra-high-bandwidth networking design, modern cooling and power management, and algorithmic co-design of hardware with software. We look forward to continuing to advance our goal of making AI real by introducing more silicon, systems, and software innovations into our datacenters globally.

Learn more

- Read the announcement: With a systems approach to chips, Microsoft aims to tailor everything ‘from silicon to service’ to meet AI demand.

- Watch Satya Nadella’s keynote at Ignite 2023: AI Infrastructure: Satya Nadella at Microsoft Ignite 2023.

- Watch a demo of GitHub Copilot running on Azure Maia 100: Inside Microsoft AI innovations with Mark Russinovich.

- Learn more about Azure AI Infrastructure.

- Learn more about Azure AI.

The post Azure Maia for the era of AI: From silicon to software to systems appeared first on Microsoft Azure Blog.
