This is the fourth blog in our series on LLMOps for business leaders. Read the first, second, and third articles to learn more about LLMOps on Azure AI.

In our LLMOps blog series, we’ve explored various dimensions of Large Language Models (LLMs) and their responsible use in AI operations. Elevating our discussion, we now introduce the LLMOps maturity model, a vital compass for business leaders. This model is not just a roadmap from foundational LLM utilization to mastery in deployment and operational management; it’s a strategic guide that underscores why understanding and implementing this model is essential for navigating the ever-evolving landscape of AI. Take, for instance, Siemens’ use of Microsoft Azure AI Studio and prompt flow to streamline LLM workflows that help support Teamcenter, its industry-leading product lifecycle management (PLM) solution, and connect people who find problems with those who can fix them. This real-world application exemplifies how the LLMOps maturity model facilitates the transition from theoretical AI potential to practical, impactful deployment in a complex industrial setting.

Exploring application maturity and operational maturity in Azure

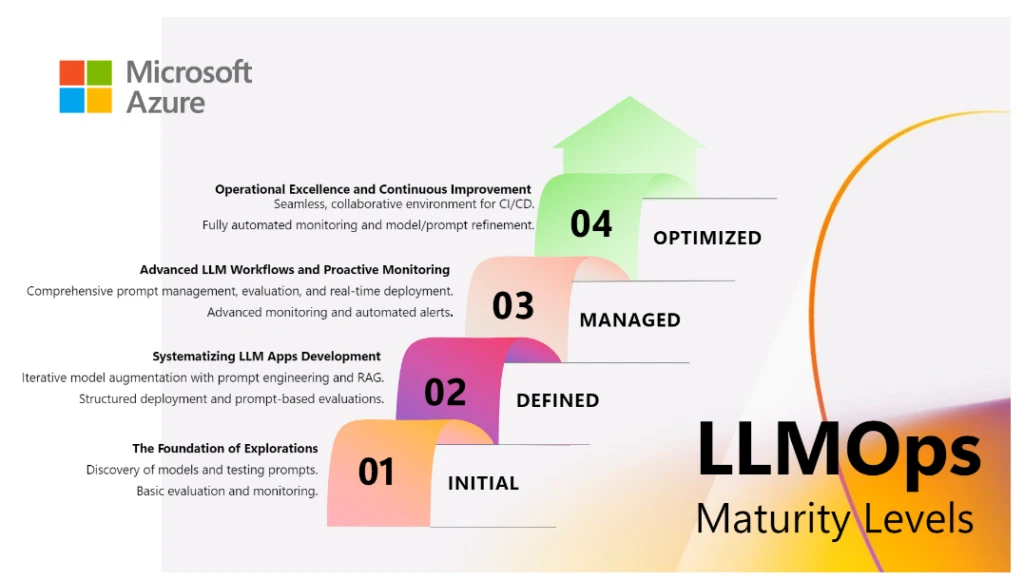

The LLMOps maturity model presents a multifaceted framework that captures two critical aspects of working with LLMs: the sophistication of application development and the maturity of operational processes.

Application maturity: This dimension centers on the advancement of LLM techniques within an application. In the initial stages, the emphasis is on exploring broad LLM capabilities, often progressing toward more intricate techniques like fine-tuning and Retrieval Augmented Generation (RAG) to meet specific needs.

Operational maturity: Regardless of the complexity of the LLM techniques employed, operational maturity is essential for scaling applications. This includes systematic deployment, robust monitoring, and maintenance strategies. The focus here is on ensuring that LLM applications are reliable, scalable, and maintainable, whatever their level of sophistication.

This maturity model is designed to reflect the dynamic and ever-evolving landscape of LLM technology, which requires a balance between flexibility and a methodical approach. This balance is crucial for navigating the continuous advancements and exploratory nature of the field. The model outlines several levels, each with its own rationale and strategy for progression, providing a clear roadmap for organizations to enhance their LLM capabilities.

LLMOps maturity model

Level One—Initial: The foundation of exploration

At this foundational stage, organizations embark on a journey of discovery and foundational understanding. The focus is predominantly on exploring the capabilities of pre-built LLMs, such as those offered by Microsoft Azure OpenAI Service APIs or Models as a Service (MaaS) through inference APIs. This phase typically involves basic coding skills for interacting with these APIs, gaining insight into their functionality, and experimenting with simple prompts. Characterized by manual processes and isolated experiments, this level doesn’t yet prioritize comprehensive evaluations, monitoring, or advanced deployment strategies. Instead, the primary objective is to understand the potential and limitations of LLMs through hands-on experimentation, which is crucial for seeing how these models can be applied to real-world scenarios.
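
To make the "basic coding skills" of this level concrete, here is a minimal sketch of the kind of call Level One developers experiment with. It builds, but does not send, a request to the Azure OpenAI chat completions REST endpoint using only the Python standard library. The endpoint, deployment name, and key are hypothetical placeholders, and the `api-version` value is an assumption; check the current Azure OpenAI REST reference for supported versions.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with your own Azure OpenAI resource values.
ENDPOINT = "https://my-resource.openai.azure.com"
DEPLOYMENT = "my-gpt-4-deployment"
API_VERSION = "2024-02-01"  # assumed API version; verify against the current docs


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completions request to an Azure OpenAI deployment."""
    url = (
        f"{ENDPOINT}/openai/deployments/{DEPLOYMENT}/chat/completions"
        f"?api-version={API_VERSION}"
    )
    body = {
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": 0.2,  # low temperature for more repeatable experiments
        "max_tokens": 200,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


req = build_chat_request("Summarize our return policy in one sentence.", api_key="<your-key>")
# Sending it would be urllib.request.urlopen(req); the first answer in the JSON
# response is found at ["choices"][0]["message"]["content"].
```

Official SDKs wrap this same request; the raw form is shown only to make the moving parts (endpoint, deployment, API version, key) visible.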

At companies like Contoso¹, developers are encouraged to experiment with a variety of models, including GPT-4 from Azure OpenAI Service and Llama 2 from Meta AI. Accessing these models through the Azure AI model catalog allows them to determine which models are most effective for their specific datasets. This phase is pivotal in laying the groundwork for more advanced applications and operational strategies in the LLMOps journey.
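
Comparing candidate models "for their specific datasets" can start as simply as running every model over the same labeled examples and ranking them. The sketch below assumes each model is wrapped as a plain Python callable (hypothetical stand-ins here) and uses exact-match accuracy, a deliberately simple metric chosen for illustration rather than one the article prescribes.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical: each callable would wrap a deployed model (e.g. GPT-4 or Llama 2).
ModelFn = Callable[[str], str]


def exact_match_rate(model: ModelFn, dataset: List[Tuple[str, str]]) -> float:
    """Fraction of questions whose normalized answer equals the reference answer."""
    hits = sum(
        1
        for question, expected in dataset
        if model(question).strip().lower() == expected.strip().lower()
    )
    return hits / len(dataset)


def rank_models(models: Dict[str, ModelFn], dataset: List[Tuple[str, str]]) -> List[Tuple[str, float]]:
    """Score every model on the same dataset and sort best-first."""
    scores = [(name, exact_match_rate(fn, dataset)) for name, fn in models.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


# Toy data and fake models, for illustration only.
dataset = [("capital of france?", "Paris"), ("2 + 2?", "4")]
models = {
    "model-a": lambda q: "Paris" if "france" in q else "5",
    "model-b": lambda q: "paris" if "france" in q else "4",
}
print(rank_models(models, dataset))  # [('model-b', 1.0), ('model-a', 0.5)]
```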

Level Two—Defined: Systematizing LLM app development

As organizations become more proficient with LLMs, they start adopting a systematic approach in their operations. This level introduces structured development practices, focusing on prompt design and the effective use of different types of prompts, such as those found in the meta prompt templates in Azure AI Studio. At this level, developers start to understand the impact of different prompts on LLM outputs and the importance of responsible AI in generated content.
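
A meta prompt is essentially a reusable system message with slots for context. The sketch below shows one way to keep such a template in code and combine it with a user question as chat messages; the template wording and placeholder names are hypothetical and are not copied from the Azure AI Studio templates.

```python
from string import Template

# Hypothetical meta prompt (system message); wording and placeholders are illustrative.
META_PROMPT = Template(
    "You are an assistant for $company.\n"
    "- Answer only questions about $domain.\n"
    "- If you do not know the answer, say so instead of guessing.\n"
    "- Do not produce harmful, hateful, or offensive content."
)


def build_messages(company: str, domain: str, user_question: str) -> list:
    """Fill in the meta prompt and pair it with the user's question as chat messages."""
    system = META_PROMPT.substitute(company=company, domain=domain)  # KeyError if a slot is missing
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_question},
    ]


messages = build_messages("Contoso", "Contoso products", "How do I reset my device?")
```

Keeping the template separate from the question makes it easy to version and compare prompt variants later, which becomes important at higher levels.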

An important tool that comes into play here is Azure AI prompt flow. It helps streamline the entire development cycle of AI applications powered by LLMs, providing a comprehensive solution that simplifies prototyping, experimenting, iterating, and deploying AI applications. At this point, developers start focusing on responsibly evaluating and monitoring their LLM flows. Prompt flow offers a comprehensive evaluation experience, allowing developers to assess applications on various metrics, including accuracy and responsible AI metrics like groundedness. Additionally, LLMs are integrated with RAG techniques to pull information from organizational data, allowing for tailored LLM solutions that maintain data relevance and optimize costs.
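
Groundedness asks whether an answer is supported by the source material it was given. Prompt flow’s built-in groundedness evaluation is typically scored by an LLM acting as a judge; the sketch below is only a crude lexical proxy, assumed here so the idea can be shown without calling a model. It measures what share of the answer’s content words also appear in the context.

```python
import re


def _tokens(text: str) -> set:
    """Lowercase word tokens longer than three characters (a rough content-word filter)."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if len(w) > 3}


def lexical_groundedness(answer: str, context: str) -> float:
    """Share of the answer's content words that also appear in the context.

    Illustrative heuristic only: it ignores meaning, paraphrase, and negation,
    so it is not a substitute for an LLM-judged groundedness metric.
    """
    answer_words = _tokens(answer)
    if not answer_words:
        return 0.0
    return len(answer_words & _tokens(context)) / len(answer_words)


context = "Returns are accepted within thirty days with a receipt."
print(lexical_groundedness("Returns accepted within thirty days.", context))  # 1.0
print(lexical_groundedness("Refunds are issued in cash.", context))  # 0.0
```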

For instance, at Contoso, AI developers are now using Azure AI Search to create indexes in vector databases. These indexes are then incorporated into prompts to provide more contextual, grounded, and relevant responses using RAG with prompt flow. This phase represents a shift from basic exploration to more focused experimentation, aimed at understanding the practical use of LLMs in solving specific challenges.
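
The RAG pattern described here has two steps: retrieve the most similar documents from an index, then place them in the prompt as grounding context. The self-contained sketch below stands in for Azure AI Search with an in-memory list and uses bag-of-words vectors with cosine similarity instead of learned embeddings; both substitutions are assumptions made so the example runs without any service, and the documents are invented.

```python
import math
import re
from collections import Counter

# Toy in-memory "index" standing in for an Azure AI Search vector index (hypothetical docs).
DOCUMENTS = [
    "Contoso laptops carry a two-year limited warranty.",
    "To reset a Contoso router, hold the reset button for ten seconds.",
    "Contoso support is available on weekdays from 9am to 5pm.",
]


def embed(text: str) -> Counter:
    """Bag-of-words term counts; a real system would use an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


INDEX = [(doc, embed(doc)) for doc in DOCUMENTS]


def retrieve(query: str, k: int = 1) -> list:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(INDEX, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_grounded_prompt(query: str, k: int = 1) -> str:
    """Insert the retrieved passages into the prompt as the only allowed sources."""
    context = "\n".join(retrieve(query, k))
    return (
        "Answer using only the sources below. If they do not contain the answer, say so.\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


print(build_grounded_prompt("How do I reset my router?"))
```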

Level Three—Managed: Advanced LLM workflows and proactive monitoring

During this stage, the focus shifts to refined prompt engineering, where developers work on creating more complex prompts and integrating them effectively into applications. This involves a deeper understanding of how different prompts influence LLM behavior and outputs, leading to more tailored and effective AI solutions.

At this level, developers harness prompt flow’s enhanced features, such as plugins and function calling, to create sophisticated flows involving multiple LLMs. They can also manage different versions of prompts, code, configurations, and environments via code repositories, with the ability to track changes and roll back to previous versions. The iterative evaluation capabilities of prompt flow become essential for refining LLM flows through batch runs that apply evaluation metrics like relevance, groundedness, and similarity. This allows developers to construct and compare metaprompt variations, determining which ones yield higher-quality outputs that align with their business objectives and responsible AI guidelines.
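
The batch-run comparison of metaprompt variations boils down to: run every variant over the same set of questions, score each answer, average per variant, and pick the best. In the sketch below, the flow and the metric are hypothetical stand-ins (in practice the metric might be an LLM-judged relevance or groundedness score); the structure of the comparison is the point.

```python
from statistics import mean
from typing import Callable, Dict, List

# Hypothetical signatures: a flow maps (prompt template, question) to an answer,
# and a metric scores (question, answer), e.g. on an assumed 1-5 scale.
Flow = Callable[[str, str], str]
Metric = Callable[[str, str], float]


def batch_run(variants: Dict[str, str], questions: List[str], flow: Flow, metric: Metric) -> Dict[str, float]:
    """Run every prompt variant over the same questions and average the metric."""
    return {
        name: mean(metric(q, flow(template, q)) for q in questions)
        for name, template in variants.items()
    }


def best_variant(scores: Dict[str, float]) -> str:
    """Name of the variant with the highest average score."""
    return max(scores, key=scores.get)


# Toy stand-ins for illustration only.
variants = {"v1": "Answer briefly: {q}", "v2": "Answer briefly and cite the source: {q}"}
questions = ["What is the warranty period?", "How do I reset the router?"]
flow = lambda template, q: template.format(q=q)
metric = lambda q, a: 5.0 if "cite" in a else 3.0

scores = batch_run(variants, questions, flow, metric)
print(scores, best_variant(scores))
```

Because every variant sees the same questions, differences in the averages reflect the prompts rather than the test data.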

In addition, this phase introduces a more systematic approach to flow deployment. Organizations start implementing automated deployment pipelines, incorporating practices such as continuous integration/continuous deployment (CI/CD). This automation improves the efficiency and reliability of deploying LLM applications, marking a move toward more mature operational practices.
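
A common way to connect evaluation to CI/CD is a quality gate: a pipeline step that fails when evaluation scores fall below agreed thresholds, which blocks the deployment. The sketch below assumes the evaluation step produces a dictionary of averaged metrics; the metric names and threshold values are hypothetical choices, not defaults of prompt flow or any CI system.

```python
from typing import Dict

# Hypothetical thresholds a team might agree on.
THRESHOLDS = {"groundedness": 4.0, "relevance": 4.0}


def passes_gate(metrics: Dict[str, float], thresholds: Dict[str, float] = THRESHOLDS) -> bool:
    """True only if every required metric is present and meets its threshold."""
    return all(
        metrics.get(name, float("-inf")) >= minimum
        for name, minimum in thresholds.items()
    )


def gate_exit_code(metrics: Dict[str, float]) -> int:
    """Process exit code for a CI step: 0 lets the pipeline continue, 1 fails it."""
    return 0 if passes_gate(metrics) else 1


latest = {"groundedness": 4.3, "relevance": 3.8}  # illustrative evaluation output
print("deploy" if passes_gate(latest) else "block deployment")
```

In a pipeline script, the result of `gate_exit_code` would be passed to `sys.exit` so that a failing evaluation stops the deployment stage. A missing metric counts as a failure, so a broken evaluation step cannot silently let a flow through.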

Monitoring and maintenance also evolve during this stage. Developers actively track a range of metrics to ensure robust and responsible operations. These include quality metrics like groundedness and similarity, as well as operational metrics such as latency, error rate, and token consumption, alongside content safety measures.
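
The operational metrics named here (latency, error rate, token consumption) can be computed directly from per-request logs. The sketch below assumes a hypothetical log schema and uses a nearest-rank percentile for tail latency; a production setup would more likely rely on a monitoring service, but the arithmetic is the same.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RequestLog:
    """One logged LLM call (hypothetical schema for illustration)."""
    latency_ms: float
    ok: bool
    prompt_tokens: int
    completion_tokens: int


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]; values must be non-empty."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(logs: List[RequestLog]) -> Dict[str, float]:
    """Tail latency, error rate, and total token usage over a non-empty batch of logs."""
    return {
        "p95_latency_ms": percentile([r.latency_ms for r in logs], 95),
        "error_rate": sum(not r.ok for r in logs) / len(logs),
        "total_tokens": sum(r.prompt_tokens + r.completion_tokens for r in logs),
    }
```

Tracking a high percentile rather than the average latency surfaces the slow requests that users actually notice.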

At this stage at Contoso, developers concentrate on creating diverse prompt variations in Azure AI prompt flow, refining them for greater accuracy and relevance. They use advanced metrics like Question and Answering (QnA) Groundedness and QnA Relevance during batch runs to continually assess the quality of their LLM flows. After assessing these flows, they use the prompt flow SDK and CLI for packaging and automating deployment, integrating seamlessly with CI/CD processes. Additionally, Contoso improves its use of Azure AI Search, employing more sophisticated RAG techniques to create more complex and efficient indexes in their vector databases. This results in LLM applications that are not only faster to respond and more contextually informed, but also more cost-effective, reducing operational expenses while enhancing performance.

Level Four—Optimized: Operational excellence and continuous improvement

At the pinnacle of the LLMOps maturity model, organizations reach a stage where operational excellence and continuous improvement are paramount. This phase features highly sophisticated deployment processes, underpinned by relentless monitoring and iterative enhancement. Advanced monitoring solutions offer deep insights into LLM applications, fostering a dynamic system for continuous model and process improvement.

At this advanced stage, Contoso’s developers engage in complex prompt engineering and model optimization. Using Azure AI’s comprehensive toolkit, they build reliable and highly efficient LLM applications. They fine-tune models like GPT-4, Llama 2, and Falcon for specific requirements and set up intricate RAG patterns that improve query understanding and retrieval, making LLM outputs more logical and relevant. They continuously perform large-scale evaluations with sophisticated metrics assessing quality, cost, and latency, ensuring a thorough assessment of their LLM applications. Developers can even use an LLM-powered simulator to generate synthetic data, such as conversational datasets, to evaluate and improve accuracy and groundedness. These evaluations, conducted at various stages, embed a culture of continuous enhancement.
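
Fine-tuning starts with training data in the format the target model expects. For Azure OpenAI chat models, this is JSON Lines where each line holds a `messages` list of system, user, and assistant turns; other catalog models such as Llama 2 or Falcon may expect different formats, so treat this as an assumption to verify against the documentation for the model you tune. The system message and example pairs below are hypothetical.

```python
import json
from typing import List, Tuple

SYSTEM = "You are Contoso's product support assistant."  # hypothetical system message


def to_chat_jsonl(pairs: List[Tuple[str, str]], system: str = SYSTEM) -> str:
    """Serialize (question, ideal answer) pairs as chat-format JSONL, one example per line."""
    lines = []
    for question, answer in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


training_jsonl = to_chat_jsonl([
    ("How long is the laptop warranty?", "Contoso laptops carry a two-year limited warranty."),
    ("How do I reset my router?", "Hold the reset button for ten seconds."),
])
```

The same writer could serialize synthetic conversations produced by a simulator, as long as they are reviewed before being used for training.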

For monitoring and maintenance, Contoso adopts comprehensive strategies incorporating predictive analytics, detailed query and response logging, and tracing. These strategies are aimed at improving prompts, RAG implementations, and fine-tuning. The team uses A/B testing for updates and automated alerts to identify potential drift, bias, and quality issues, aligning their LLM applications with current industry standards and ethical norms.
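
Two building blocks behind A/B testing and drift alerts are sketched below. Deterministic hashing sends a stable fraction of users to the new version, so each user consistently sees the same variant. A simple alert compares the recent average quality score with a baseline. The traffic share and the allowed drop are hypothetical parameters; real drift detection is often more statistical than this threshold check.

```python
import hashlib
from statistics import mean
from typing import List


def assign_variant(user_id: str, treatment_share: float = 0.1) -> str:
    """Deterministically route a stable fraction of users to the new version ("B")."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # roughly uniform in [0, 1)
    return "B" if bucket < treatment_share else "A"


def drift_alert(baseline: List[float], recent: List[float], max_drop: float = 0.5) -> bool:
    """Flag when the recent average quality score falls more than max_drop below baseline."""
    return mean(baseline) - mean(recent) > max_drop


print(assign_variant("user-42"))
print(drift_alert([4.5, 4.4, 4.6], [3.8, 3.9, 4.0]))  # True: average dropped by about 0.6
```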

The deployment process at this stage is streamlined and efficient. Contoso manages the entire lifecycle of its LLM applications, including versioning and auto-approval processes based on predefined criteria. The team consistently applies advanced CI/CD practices with robust rollback capabilities, ensuring seamless updates to its LLM applications.
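
Versioning, auto-approval against predefined criteria, and rollback can be pictured as a small state machine over deployed versions. The in-memory registry below is a hypothetical illustration of that logic, not an Azure API. A candidate is deployed only if its scores meet every criterion, and rollback returns to the previously deployed version.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class FlowRegistry:
    """Hypothetical in-memory record of deployed flow versions."""
    min_scores: Dict[str, float]  # predefined approval criteria
    history: List[str] = field(default_factory=list)  # deployed versions, oldest first

    @property
    def current(self) -> Optional[str]:
        return self.history[-1] if self.history else None

    def propose(self, version: str, scores: Dict[str, float]) -> bool:
        """Auto-approve and deploy the version only if every criterion is met."""
        approved = all(
            scores.get(name, float("-inf")) >= minimum
            for name, minimum in self.min_scores.items()
        )
        if approved:
            self.history.append(version)
        return approved

    def rollback(self) -> Optional[str]:
        """Revert to the previously deployed version; the first version is never removed."""
        if len(self.history) > 1:
            self.history.pop()
        return self.current


registry = FlowRegistry(min_scores={"groundedness": 4.0})
registry.propose("v1", {"groundedness": 4.2})  # approved
registry.propose("v2", {"groundedness": 3.5})  # rejected, v1 stays live
```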

At this phase, Contoso stands as a model of LLMOps maturity, showcasing not only operational excellence but also a steadfast dedication to continuous innovation and enhancement in the LLM domain.

Identify where you are in the journey

Each level of the LLMOps maturity model represents a strategic step in the journey toward production-level LLM applications. The progression from basic understanding to sophisticated integration and optimization reflects the dynamic nature of the field. It acknowledges the need for continuous learning and adaptation, ensuring that organizations can harness the transformative power of LLMs effectively and sustainably.

The LLMOps maturity model offers a structured pathway for organizations to navigate the complexities of implementing and scaling LLM applications. By understanding the distinction between application sophistication and operational maturity, organizations can make more informed decisions about how to progress through the levels of the model. Integrating Azure AI Studio, which brings together prompt flow, the model catalog, and Azure AI Search, into this framework underscores the importance of both cutting-edge technology and robust operational strategies in achieving success with LLMs.

Learn more

- Take the 45-minute Get started with prompt flow to develop LLM apps module on Microsoft Learn

- Leverage the solution template to put LLMOps into practice

Explore Azure AI Studio

Build, evaluate, and deploy your AI solutions all within one space

¹ Contoso is a fictional but representative global organization building generative AI applications.

The post Achieve generative AI operational excellence with the LLMOps maturity model appeared first on Microsoft Azure Blog.
