With the new enhancements to the Azure OpenAI Service Provisioned offering, we are taking a big step forward in making AI accessible and enterprise-ready.

In today’s fast-evolving digital landscape, enterprises need more than just powerful AI models: they need AI solutions that are adaptable, reliable, and scalable. With the upcoming availability of Data Zones and new enhancements to the Provisioned offering in Azure OpenAI Service, we are taking a big step forward in making AI broadly available and enterprise-ready. These features represent a fundamental shift in how organizations can deploy, manage, and optimize generative AI models.

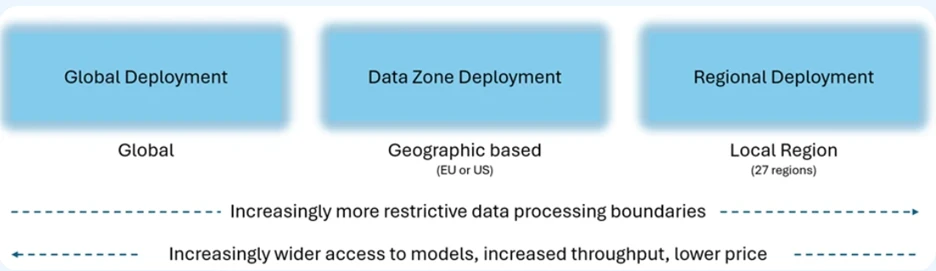

With the launch of Azure OpenAI Service Data Zones in the European Union and the United States, enterprises can now scale their AI workloads with even greater ease while maintaining compliance with regional data residency requirements. Historically, differences in model availability across regions forced customers to manage multiple resources, often slowing down development and complicating operations. Azure OpenAI Service Data Zones remove that friction by offering flexible, multi-regional data processing while ensuring that data is processed and stored within the selected data boundary.

This is a compliance win that also allows businesses to seamlessly scale their AI operations across regions, optimizing for both performance and reliability without having to navigate the complexities of managing traffic across disparate systems.

Leya, a tech startup building a genAI platform for legal professionals, has been exploring the Data Zones deployment option.

“The Azure OpenAI Service Data Zones deployment option offers Leya a cost-efficient way to securely scale AI applications to thousands of lawyers, ensuring compliance and top performance. It helps us achieve better customer quality and control, with rapid access to the latest Azure OpenAI innovations.” (Sigge Labor, CTO, Leya)

Data Zones will be available for both the Standard (PayGo) and Provisioned offerings, starting this week on November 1, 2024.

Industry-leading performance

Enterprises rely on predictability, especially when deploying mission-critical applications. That’s why we’re introducing a 99% latency service level agreement (SLA) for token generation. This latency SLA ensures that tokens are generated at faster and more consistent speeds, especially at high volumes.
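
The post does not describe how the SLA’s latency is measured. As a rough illustration of what a 99% latency target means in practice, the sketch below checks whether at least 99% of observed per-token latencies fall at or below a threshold. The threshold value, the per-token measurements, and the helper names are assumptions for illustration, not the terms of the Azure SLA.

```python
import math


def nearest_rank_percentile(values: list[float], pct: float) -> float:
    """Return the nearest-rank percentile of values (pct in (0, 100])."""
    if not values:
        raise ValueError("values must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(values)
    # 1-based nearest rank; multiply before dividing to limit float error.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def meets_latency_target(per_token_ms: list[float], threshold_ms: float, pct: float = 99.0) -> bool:
    """True if at least pct% of observations are at or below threshold_ms.

    threshold_ms is a hypothetical target chosen by the caller; it is not
    a value defined in the Azure OpenAI SLA.
    """
    return nearest_rank_percentile(per_token_ms, pct) <= threshold_ms


# Example: 99 fast tokens and 1 slow token still meet a 25 ms target at the 99th percentile.
samples = [20.0] * 99 + [80.0]
print(meets_latency_target(samples, threshold_ms=25.0))  # True
```

The nearest-rank method is used because, with it, "the 99th percentile is at or below the threshold" is exactly equivalent to "at least 99% of samples are at or below the threshold."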

The Provisioned offering provides predictable performance for your application. Whether you’re in e-commerce, healthcare, or financial services, the ability to rely on low-latency, high-reliability AI infrastructure translates directly into better customer experiences and more efficient operations.

Lowering the cost of getting started

To make it easier to test, scale, and manage, we are reducing hourly pricing for Provisioned Global and Provisioned Data Zone deployments starting November 1, 2024. This cost reduction ensures that our customers can benefit from these new features without the burden of high expenses. The Provisioned offering continues to offer discounts for monthly and yearly commitments.

| Deployment option | Hourly price per PTU | One-month reservation per PTU | One-year reservation per PTU |
| --- | --- | --- | --- |
| Provisioned Global | Current: $2.00 per hour; from November 1, 2024: $1.00 per hour | $260 per month | $221 per month |
| Provisioned Data Zone (new) | From November 1, 2024: $1.10 per hour | $260 per month | $221 per month |
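
To make the table concrete, the sketch below compares one month of spend for a fixed number of PTUs under hourly billing and under each reservation term, and computes how many hours of use per month make a one-month reservation the cheaper choice. The prices come from the table; the 730-hours-per-month figure is an assumption (8,760 hours divided by 12), and the model deliberately ignores taxes, partial months, and how reservations are matched to deployments in practice.

```python
# Per-PTU prices from the pricing table (USD, effective November 1, 2024).
HOURLY_RATE_PER_PTU = {"global": 1.00, "data_zone": 1.10}
ONE_MONTH_RESERVATION_PER_PTU = 260.0  # USD per PTU per month
ONE_YEAR_RESERVATION_PER_PTU = 221.0   # USD per PTU per month

# Assumption: an average month has about 730 hours (8,760 hours / 12).
HOURS_PER_MONTH = 730


def monthly_cost(ptus: int, deployment: str, hours_used: float = HOURS_PER_MONTH) -> dict[str, float]:
    """Compare one month of spend for a fixed PTU count under each pricing option (simplified)."""
    return {
        "hourly": HOURLY_RATE_PER_PTU[deployment] * ptus * hours_used,
        "one_month_reservation": ONE_MONTH_RESERVATION_PER_PTU * ptus,
        "one_year_reservation": ONE_YEAR_RESERVATION_PER_PTU * ptus,
    }


def breakeven_hours(deployment: str, reservation_per_month: float = ONE_MONTH_RESERVATION_PER_PTU) -> float:
    """Hours of use per month above which a reservation costs less than hourly billing."""
    return reservation_per_month / HOURLY_RATE_PER_PTU[deployment]


print(monthly_cost(20, "global"))
# {'hourly': 14600.0, 'one_month_reservation': 5200.0, 'one_year_reservation': 4420.0}
print(breakeven_hours("global"))  # 260.0
```

Under these assumptions, hourly billing suits short tests and bursts, while a deployment that runs more than roughly 260 hours a month (Global) or about 236 hours a month (Data Zone) is cheaper on a reservation.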

We are also reducing deployment minimum entry points for Provisioned Global deployments by 70% and scaling increments by up to 90%, lowering the barrier for businesses to get started with the Provisioned offering earlier in their development lifecycle.

Deployment quantity minimums and increments (in PTUs) for the Provisioned offering

| Model | Global | Data Zone (new) | Regional |
| --- | --- | --- | --- |
| GPT-4o | Min: Increment: | Min: 15, Increment: 5 | Min: 50, Increment: 50 |
| GPT-4o-mini | Min: Increment: | Min: 15, Increment: 5 | Min: 25, Increment: 25 |
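
The sketch below shows how a minimum and an increment together define which deployment sizes are possible, and how a required PTU count rounds up to the nearest deployable size. It assumes a valid quantity is the minimum plus a whole number of increments; that reading, and the helper names, are illustrative rather than taken from Azure documentation. Only the Data Zone and Regional values are included because the table does not give the Global figures.

```python
# (minimum, increment) in PTUs, from the table above. Global values are not
# given there, so only Data Zone and Regional are included.
PTU_RULES: dict[tuple[str, str], tuple[int, int]] = {
    ("gpt-4o", "data_zone"): (15, 5),
    ("gpt-4o", "regional"): (50, 50),
    ("gpt-4o-mini", "data_zone"): (15, 5),
    ("gpt-4o-mini", "regional"): (25, 25),
}


def is_valid_quantity(model: str, deployment: str, ptus: int) -> bool:
    """Assumed rule: valid if the quantity is the minimum plus whole increments."""
    minimum, increment = PTU_RULES[(model, deployment)]
    return ptus >= minimum and (ptus - minimum) % increment == 0


def smallest_valid_quantity(model: str, deployment: str, needed: int) -> int:
    """Round a required PTU count up to the nearest deployable quantity."""
    minimum, increment = PTU_RULES[(model, deployment)]
    if needed <= minimum:
        return minimum
    steps = -(-(needed - minimum) // increment)  # integer ceiling division
    return minimum + steps * increment


print(smallest_valid_quantity("gpt-4o", "data_zone", 16))  # 20
print(smallest_valid_quantity("gpt-4o", "regional", 16))   # 50
```

The two calls illustrate why smaller minimums and increments matter: a workload that needs 16 PTUs of GPT-4o fits in 20 Data Zone PTUs, whereas a Regional deployment would require 50.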

For developers and IT teams, this means faster time-to-deployment and less friction when transitioning from the Standard to the Provisioned offering. As businesses grow, these simple transitions become critical to maintaining agility while scaling AI applications globally.

Efficiency through caching: A game-changer for high-volume applications

Another new feature is Prompt Caching, which offers cheaper and faster inference for repetitive API requests. On Standard deployments, cached input tokens are billed at a 50% discount. For applications that frequently send the same system prompts and instructions, this improvement provides a significant cost and performance advantage.
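
To see what the Standard discount means for a workload with a long, repeated system prompt, the sketch below compares input-token cost with and without cache hits. Only the 50% discount comes from this post; the per-million-token price, the share of tokens served from the cache, and the function name are hypothetical, and output tokens are left out.

```python
def input_token_cost(
    total_input_tokens: int,
    cached_tokens: int,
    price_per_million: float,
    cached_discount: float = 0.50,
) -> float:
    """Input-token cost when part of the prompt is served from the cache.

    cached_discount=0.50 reflects the 50% Standard discount; price_per_million
    is a placeholder, not a published Azure OpenAI price.
    """
    if not 0 <= cached_tokens <= total_input_tokens:
        raise ValueError("cached_tokens must be between 0 and total_input_tokens")
    uncached_tokens = total_input_tokens - cached_tokens
    # Cached tokens count at (1 - discount) of the normal input price.
    billable_equivalent = uncached_tokens + cached_tokens * (1 - cached_discount)
    return billable_equivalent * price_per_million / 1_000_000


# Hypothetical: 1M input tokens, 60% of them cached, at $2.50 per 1M tokens.
without_cache = input_token_cost(1_000_000, 0, 2.50)
with_cache = input_token_cost(1_000_000, 600_000, 2.50)
print(without_cache, with_cache)  # 2.5 1.75
```

In this example, caching 60% of the input tokens cuts input cost by 30%; the saving grows with the share of each request that repeats the same instructions.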

By caching prompts, organizations can maximize their throughput without reprocessing identical prompt content on every request, all while reducing costs. This is particularly beneficial in high-traffic environments, where even slight performance boosts can translate into tangible business gains.

A new era of model flexibility and performance

One of the key benefits of the Provisioned offering is its flexibility, with one simple hourly, monthly, and yearly price that applies to all available models. We’ve also heard your feedback that it is difficult to understand how many tokens per minute (TPM) you get for each model on Provisioned deployments. We now provide a simplified view of the number of input and output tokens per minute for each Provisioned deployment, so customers no longer need to rely on detailed conversion tables or calculators.
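
Once input and output TPM figures are available per deployment, sizing becomes a short calculation. The sketch below estimates a PTU count for a target token rate and rounds it up to a deployable quantity. It assumes each PTU’s capacity is shared linearly between input and output tokens, so the fractions of capacity each uses are added together. The per-PTU rates in the example (2,500 input TPM and 625 output TPM) are made-up placeholders, and the function name is hypothetical; the minimum and increment come from the Data Zone column of the table earlier in this post.

```python
import math


def estimate_ptus(
    input_tpm: float,
    output_tpm: float,
    input_tpm_per_ptu: float,
    output_tpm_per_ptu: float,
    minimum: int,
    increment: int,
) -> int:
    """Estimate a deployable PTU count for a target input/output token rate.

    Assumption: capacity is shared linearly, so the fraction of a PTU used by
    input tokens and the fraction used by output tokens are summed. Take the
    per-PTU rates from the TPM figures shown for your deployment.
    """
    raw = input_tpm / input_tpm_per_ptu + output_tpm / output_tpm_per_ptu
    ptus = math.ceil(raw)
    if ptus <= minimum:
        return minimum
    # Round up to the next whole increment above the minimum.
    steps = -(-(ptus - minimum) // increment)
    return minimum + steps * increment


# Hypothetical workload: 100k input TPM and 10k output TPM, Data Zone limits (15, 5).
print(estimate_ptus(100_000, 10_000, 2_500, 625, minimum=15, increment=5))  # 60
```

Here the raw estimate is 40 + 16 = 56 PTUs, which rounds up to 60 under a minimum of 15 and an increment of 5.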

We are maintaining the flexibility that customers love in the Provisioned offering. With monthly and yearly commitments, you can still change the model and version (for example, between GPT-4o and GPT-4o-mini) within the reservation period without losing any discount. This agility allows businesses to experiment, iterate, and evolve their AI deployments without incurring unnecessary costs or having to restructure their infrastructure.

Enterprise readiness in action

Azure OpenAI’s continuous innovations aren’t just theoretical; they’re already delivering results across industries. For instance, companies like AT&T, H&R Block, Mercedes, and more are using Azure OpenAI Service not just as a tool, but as a transformational asset that reshapes how they operate and engage with customers.

Beyond models: The enterprise-grade promise

It’s clear that the future of AI is about much more than just offering the latest models. While powerful models like GPT-4o and GPT-4o-mini provide the foundation, it’s the supporting infrastructure, such as the Provisioned offering, the Data Zones deployment option, SLAs, caching, and simplified deployment flows, that truly makes Azure OpenAI Service enterprise-ready.

Microsoft’s vision is to provide not only cutting-edge AI models but also the enterprise-grade tools and support that allow businesses to scale these models confidently, securely, and cost-effectively. From enabling low-latency, high-reliability deployments to offering flexible and simplified infrastructure, Azure OpenAI Service empowers enterprises to fully embrace the future of AI-driven innovation.

Get started today

As the AI landscape continues to evolve, the need for scalable, flexible, and reliable AI solutions becomes even more critical for enterprise success. With the latest enhancements to Azure OpenAI Service, Microsoft is delivering on that promise, giving customers not just access to world-class AI models, but also the tools and infrastructure to operationalize them at scale.

Now is the time for businesses to unlock the full potential of generative AI with Azure, moving beyond experimentation into real-world, enterprise-grade applications that drive measurable outcomes. Whether you’re scaling a virtual assistant, developing real-time voice applications, or transforming customer service with AI, Azure OpenAI Service provides the enterprise-ready platform you need to innovate and grow.
